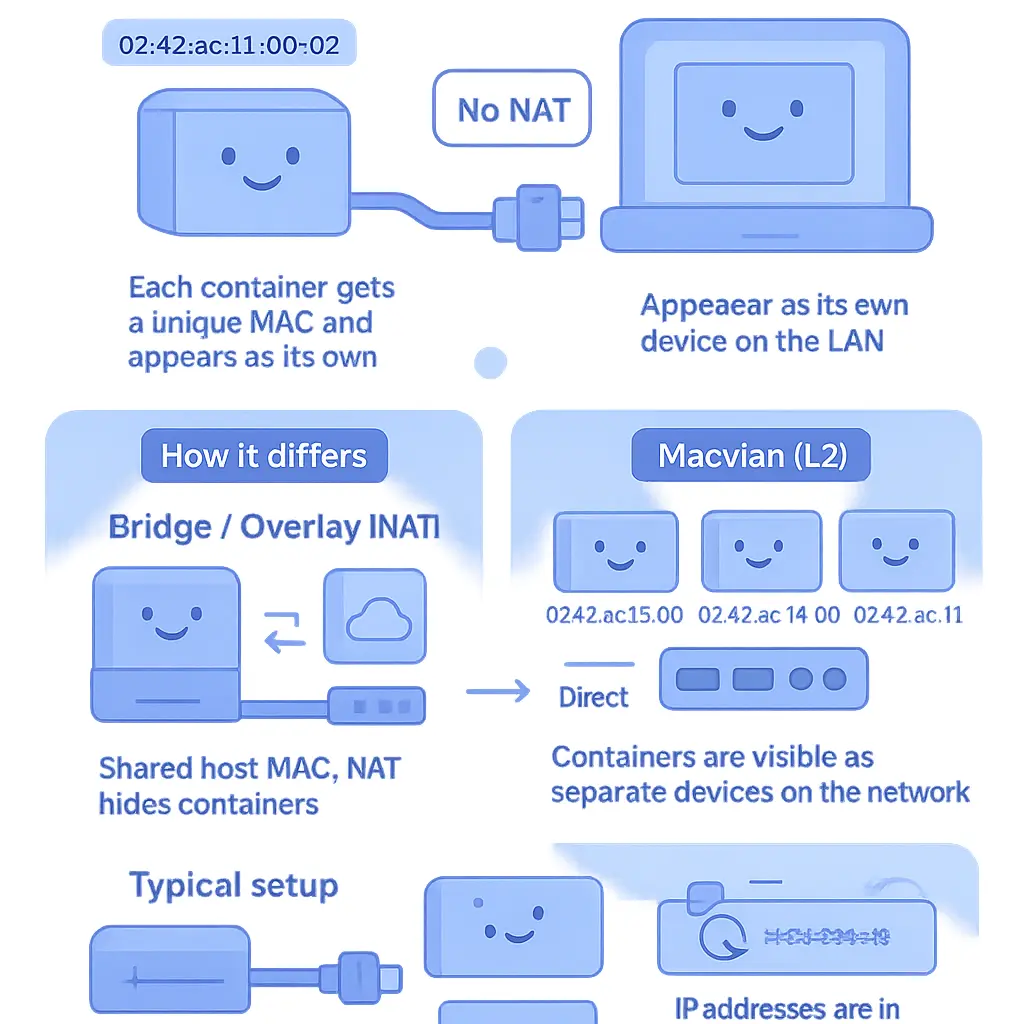

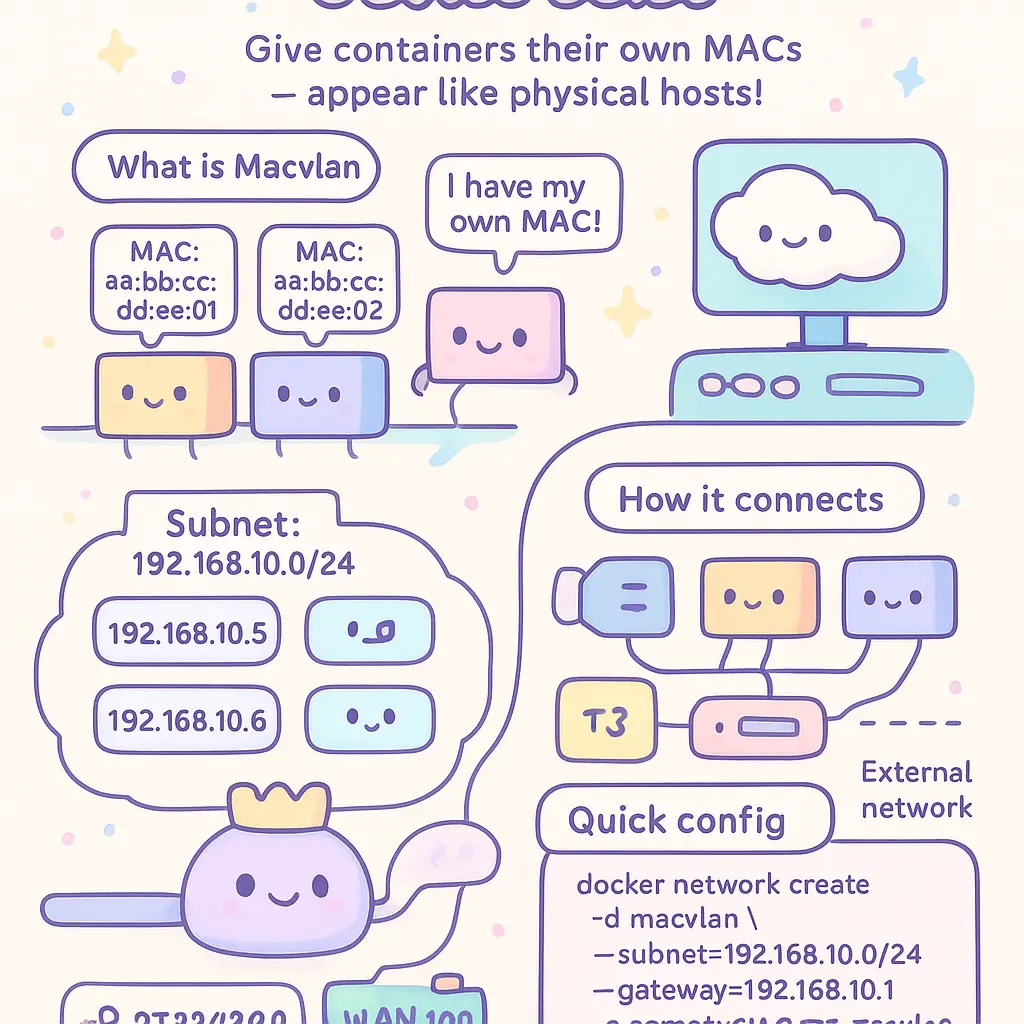

What Is Macvlan Networking

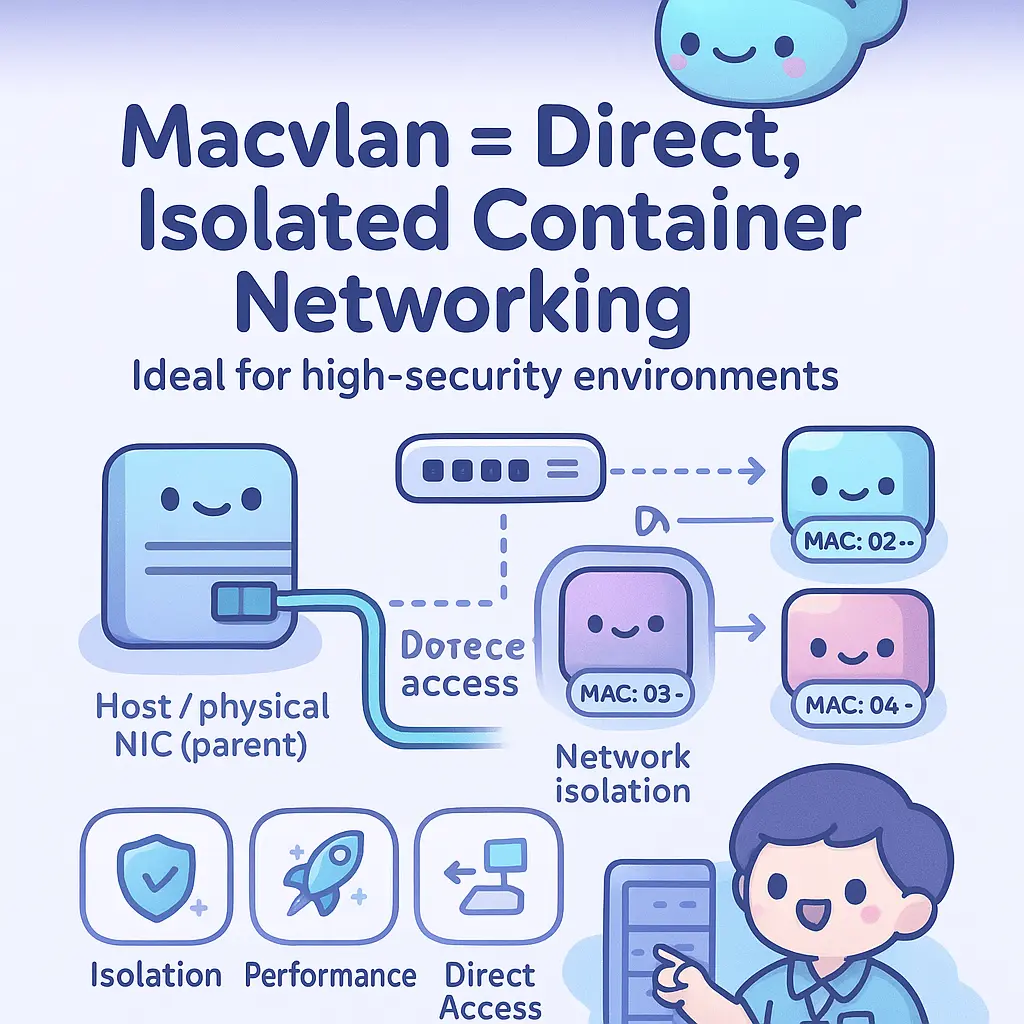

Macvlan networking is a Linux kernel feature, exposed in Docker as the macvlan network driver, that gives each container its own MAC address so that it appears as a physical device directly connected to the network. The default bridge network places containers behind the Docker host’s IP using Network Address Translation (NAT), and the overlay driver encapsulates traffic between hosts. Macvlan instead assigns containers IP addresses from the subnet of the physical network the host is attached to, so they can communicate with external devices such as network switches, routers, and other servers just like any other host. Because each container is an individual endpoint at Layer 2, this approach suits environments where security policy, segmentation, or network troubleshooting is handled on the physical network.

One of the main advantages of Macvlan networking is that it avoids the NAT and port mapping of bridge mode and the encapsulation of overlay networks, both of which add configuration work and some performance overhead. With Macvlan, a network can be bound to an 802.1Q VLAN sub-interface of the parent, making it easier to integrate with enterprise network infrastructure that relies on VLANs for network segmentation and security. This is especially valuable in data centers, and in Kubernetes clusters that use the macvlan CNI plugin, where network provisioning, monitoring, and management need to align with physical network designs. One caveat concerns promiscuous mode: the parent interface has to accept frames addressed to several MAC addresses, so in virtualized environments such as VirtualBox the virtual NIC usually must be allowed to run in promiscuous mode, and some platforms do not permit this at all.

Configuring Macvlan involves choosing a parent interface (usually a physical network interface on the Docker host) and defining the subnet, gateway, and address range that containers will use. Administrators can run Macvlan in bridge mode, the default, where containers on the same parent communicate with each other and with external devices, or in private mode, which blocks traffic between Macvlan endpoints on the same parent and leaves only external communication. Because addresses are assigned explicitly, Macvlan suits situations where overlapping subnets are not acceptable, or where containers need statically assigned IP addresses to meet compliance requirements.

In production environments, Macvlan networking is a popular choice for deploying applications that require direct access to the underlying network, such as legacy apps, network appliances, or workloads that demand high throughput with minimal latency. For example, in a Docker Swarm deployment, Macvlan enables each service replica to be reachable from the outside world with its own unique IP, thereby simplifying service discovery and load balancing without relying on complex NAT rules or overlay driver configurations. Similarly, in microservices architectures, Macvlan facilitates seamless container communication across different hosts by aligning container network interfaces with the physical network infrastructure.

However, using Macvlan presents specific network security and troubleshooting considerations. Since containers are exposed directly to the physical network, traditional firewalls and access lists must be carefully managed to prevent unauthorized access. Network monitoring tools must also be adapted to track traffic at the container level, as containers now act similarly to independent devices on the subnet. In environments with strict network policies, administrators can leverage Macvlan’s support for Network Namespace isolation to ensure each container’s network stack is fully separated, reducing the risk of cross-container attacks or misconfigurations.

Ultimately, Macvlan networking is an advanced tool in the container network configuration toolkit, offering unmatched flexibility and performance for scenarios where containers must operate as first-class citizens on the enterprise network. When combined with robust network interface configuration, monitoring, and provisioning practices, Macvlan enables organizations to build highly secure, segmented, and high-performance containerized environments that fully leverage the capabilities of modern network infrastructure.

Macvlan Use Cases 2026

In 2026, Macvlan has cemented its place as a go-to solution for advanced Docker networking scenarios, especially when organizations need fine-grained container network isolation and direct access to the underlying physical network. One of the most common use cases is in high-security environments, where each container must have its own unique MAC and IP address within a subnet—this is critical for compliance and robust network segmentation. For example, financial services and healthcare companies are increasingly deploying microservices with Macvlan to satisfy regulatory requirements for network isolation and auditable traffic flows, leveraging Macvlan’s separation at the network layer far beyond what bridge mode or typical Docker overlay drivers can provide.

Another prevalent scenario in 2026 involves integrating containers directly with existing enterprise networks. By attaching containers to the parent’s physical network interface (such as eth0), each container appears as a native device on the LAN, streamlining tasks like network monitoring, troubleshooting, and device discovery. IT teams rely on this setup for deploying legacy applications inside containers, allowing seamless interaction with external services, network switches, and hardware appliances that do not recognize Docker’s traditional NAT or bridge network techniques. In these cases, Macvlan bypasses the Linux kernel’s NAT, eliminating the complexity and latency of multiple network translations, and supports scenarios requiring VLAN trunking or 802.1Q tagging, which are fundamental in modern cloud data centers for dynamic subnet configuration and policy enforcement.

Macvlan also shines in multi-tenant environments, such as managed Kubernetes clusters on-premises or in hybrid clouds, where strict container network isolation is necessary. By assigning containers to different VLANs using Macvlan in bridge mode or CNI plugins, network administrators can enforce boundaries between tenants or environments (like staging vs. production) using hardware network policies and firewalls, not just software rules. For instance, a SaaS provider running separate customer workloads can leverage Macvlan to map each tenant’s containers to distinct VLANs, ensuring data privacy and compliance with industry standards.

Gateway configuration with Macvlan remains a nuanced task as of 2026. When containers need outbound internet or cross-subnet connectivity, their default gateway is normally the router that already serves the physical subnet; in some IoT and edge deployments a dedicated host fills that role instead. In either case the containers are still recognized as individual network endpoints while they communicate with external devices or cloud services. Routing, firewalling, and network-based service discovery can then be handled by the existing network infrastructure rather than by the default Docker networking stack.

For large-scale Docker Swarm or VirtualBox-based development labs, Macvlan is increasingly used to simulate real-world networking conditions. Developers and QA engineers can create network namespaces that mimic production topologies, test complex network provisioning routines, and evaluate network security policies under realistic constraints, all without the overhead of full VM virtualization. When combined with promiscuous mode on the host interface, Macvlan enables powerful packet capture and network analysis for debugging, making it a favorite for network troubleshooting and auditing.

Finally, in the realm of service discovery and container communication, Macvlan offers a straightforward path for containers to interact with external services or legacy systems that expect direct, routable IP/MAC addresses—without the indirection or overhead of overlay or bridge networks. This direct connectivity is invaluable for industrial automation, telecom, and scientific computing, where deterministic latency and uncompromised network interface configuration are non-negotiable.

In essence, the 2026 landscape sees Macvlan adopted not just for its traditional strengths in isolation and direct network integration, but as a foundational piece for secure, flexible, and highly-performant containerized environments—especially where network address translation is undesirable or where virtual network interface management must closely mimic physical infrastructure. Whether in tightly regulated industries, hybrid cloud architectures, or specialized development and testing setups, Macvlan delivers the granular control and native networking experience modern infrastructures demand.

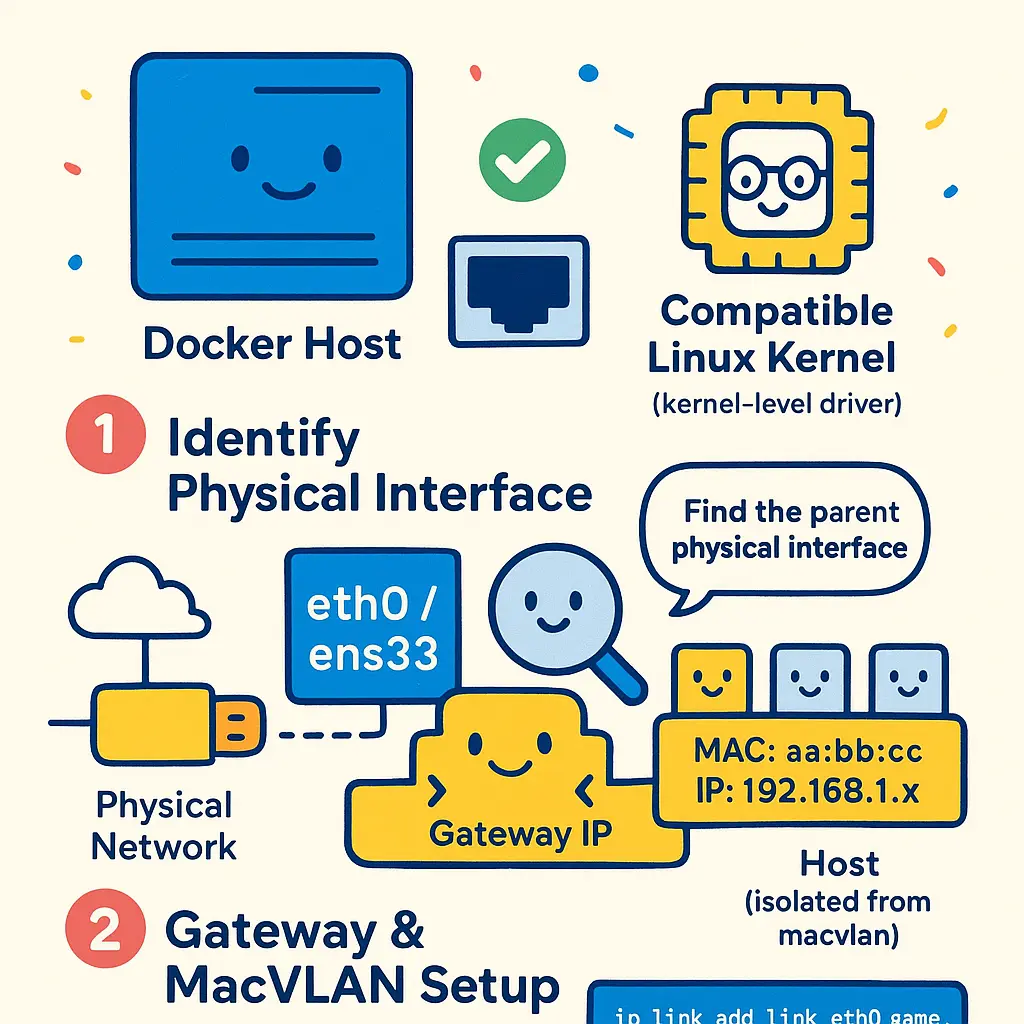

Setting Up Macvlan Step-by-Step

To set up Macvlan step-by-step in a modern Docker networking environment (2026), start by preparing your Docker Host with a compatible Linux Kernel, since MacVLAN is a kernel-level network driver. The process begins by identifying the physical network interface (commonly eth0 or ens33) that connects your host to the network switch. This interface will act as the parent interface for your Macvlan configuration, effectively bridging your container network to the external Layer 2 network segment. Ensure your network infrastructure supports VLANs and 802.1Q tagging if you require VLAN trunking for network segmentation.

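First, review your subnet configuration and plan your container IP allocation. The subnet and gateway you give a Macvlan network must be the ones actually in use on the parent interface’s Layer 2 segment (or on the VLAN you are attaching to), and they must not overlap with subnets used by Docker bridge networks on the host. For instance, if that segment is 192.168.100.0/24, reserve a slice of it for containers and exclude that slice from the DHCP pool so that Docker and the DHCP server never hand out the same address. A documented, dedicated range makes it easier to apply network security policies and to control which devices the containers talk to.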
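The planning step can be checked before any network exists. The sketch below is a minimal example using Python’s standard `ipaddress` module; it assumes 192.168.100.0/24 is the subnet in use on the parent interface’s segment, and the function name, the /28 container range, and the reserved addresses are all hypothetical values chosen for illustration.

```python
import ipaddress


def plan_container_range(lan_subnet, container_range, reserved):
    """Validate a container range carved out of the parent's LAN subnet.

    Returns every address in the range, or raises ValueError if the range
    falls outside the subnet or contains a reserved address (gateway, host).
    """
    subnet = ipaddress.ip_network(lan_subnet)
    ip_range = ipaddress.ip_network(container_range)
    if not ip_range.subnet_of(subnet):
        raise ValueError(f"{ip_range} is not inside {subnet}")
    clashes = [a for a in reserved if ipaddress.ip_address(a) in ip_range]
    if clashes:
        raise ValueError(f"reserved addresses inside the range: {clashes}")
    return [str(addr) for addr in ip_range]


if __name__ == "__main__":
    # Hypothetical plan: router at .1, Docker host at .10, containers in .192/28.
    addresses = plan_container_range(
        "192.168.100.0/24",
        "192.168.100.192/28",
        reserved=["192.168.100.1", "192.168.100.10"],
    )
    print(f"{len(addresses)} addresses, {addresses[0]} to {addresses[-1]}")
```

The same range would then need to be excluded from the DHCP pool on the router, which this sketch cannot verify.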

Next, create a Macvlan network using the Docker CLI. When specifying network details, assign the parent interface and select either bridge mode (the default, ideal for connecting containers to the physical network) or 802.1Q trunk bridge mode, in which the parent is a VLAN sub-interface such as eth0.50. If your setup involves Docker Swarm, or Kubernetes with CNI plugins, confirm that the macvlan driver or plugin is available on every node and that network policies are applied consistently for multi-host container deployments.
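As a rough illustration of the creation step, the following sketch assembles a `docker network create` command line for the macvlan driver without running it. The flags used (`-d macvlan`, `--subnet`, `--gateway`, `--ip-range`, `-o parent=`, `-o macvlan_mode=`) are standard Docker CLI options; the helper name, the network name `lan_macvlan`, and the addresses are assumptions made for the example.

```python
import shlex

# Modes accepted by Docker's macvlan driver.
MACVLAN_MODES = {"bridge", "vepa", "passthru", "private"}


def macvlan_create_cmd(name, parent, subnet, gateway, ip_range=None, mode="bridge"):
    """Build the argv for `docker network create` with the macvlan driver."""
    if mode not in MACVLAN_MODES:
        raise ValueError(f"unknown macvlan mode: {mode}")
    cmd = ["docker", "network", "create", "-d", "macvlan",
           "--subnet", subnet, "--gateway", gateway]
    if ip_range:
        # Restrict Docker's allocations to a slice of the subnet.
        cmd += ["--ip-range", ip_range]
    cmd += ["-o", f"parent={parent}", "-o", f"macvlan_mode={mode}", name]
    return cmd


if __name__ == "__main__":
    argv = macvlan_create_cmd(
        "lan_macvlan", "eth0", "192.168.100.0/24", "192.168.100.1",
        ip_range="192.168.100.192/28",
    )
    # Print for review; pass argv to subprocess.run() to actually execute it.
    print(shlex.join(argv))
```

Building the command as a list keeps it easy to review or log before it is executed on a real host.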

The gateway, subnet, and optional VLAN ID are fixed when the network is created; to change them, remove the network and create it again. For most environments, the gateway should point to your physical network’s existing router, allowing containers to reach external resources without any network address translation (NAT) on the Docker host. If the host is itself a virtual machine (for example in VirtualBox), the hypervisor must allow promiscuous mode on the virtual NIC, and many public clouds do not accept additional MAC addresses on an interface at all. Without that support, containers may not receive traffic as expected.

Once the Macvlan network is set up, create your containers and attach them to this network. Each container will receive a unique IP address from your specified subnet and appear as a separate device on the physical network switch. This configuration can be verified using network monitoring tools—check ARP tables, traffic flows, and run basic connectivity tests to ensure containers are accessible from your LAN and vice versa. Leveraging Macvlan enables container network isolation without overhead from an overlay driver, and also simplifies network troubleshooting since containers act as first-class citizens at the network layer.
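Attaching a container with a fixed address can be sketched the same way. The example below builds a `docker run` command using the real `--network` and `--ip` flags and checks first that the requested address belongs to the network’s subnet; the helper name, container name, image, and addresses are hypothetical.

```python
import ipaddress
import shlex


def macvlan_run_cmd(image, network, subnet, ip, name):
    """Build the argv for a detached container with a static Macvlan IP."""
    if ipaddress.ip_address(ip) not in ipaddress.ip_network(subnet):
        raise ValueError(f"{ip} is not inside {subnet}")
    return ["docker", "run", "-d", "--name", name,
            "--network", network, "--ip", ip, image]


if __name__ == "__main__":
    argv = macvlan_run_cmd(
        "nginx:alpine", "lan_macvlan", "192.168.100.0/24",
        "192.168.100.193", "web1",
    )
    print(shlex.join(argv))
```

Once such a container is running, a ping from another machine on the LAN (not from the Docker host itself) and a look at that machine’s ARP table are quick ways to confirm it is reachable.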

If you’re operating in a virtualized environment (like VirtualBox), double-check your virtual NIC settings and host network configuration. A VM adapter that is not in bridged mode, or that has promiscuous mode set to deny, can prevent container connectivity. For advanced scenarios, integrate Macvlan with network provisioning and automation tools such as Ansible or Terraform to manage parent interface assignments and subnet allocations.

Keep in mind common pitfalls during setup: overlapping subnets between Macvlan and host bridge networks can lead to unpredictable connectivity issues; insufficient network segmentation may expose containers to unnecessary risks; and missing VLAN configurations on upstream switches can block traffic. Regularly validate your configuration by reviewing container network configuration parameters and monitoring for rogue devices or unexpected traffic flows.
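The overlapping-subnet pitfall is easy to test for ahead of time. This minimal sketch compares a candidate Macvlan subnet against subnets already used by other Docker networks; the network names and CIDRs in the example are made up, and in practice the mapping would be filled in from your own inventory.

```python
import ipaddress


def find_overlaps(candidate, existing):
    """Return the names of existing networks whose subnet overlaps candidate.

    `existing` maps a network name to its CIDR string.
    """
    cand = ipaddress.ip_network(candidate)
    return [name for name, cidr in existing.items()
            if cand.overlaps(ipaddress.ip_network(cidr))]


if __name__ == "__main__":
    # Hypothetical inventory of networks already defined on the host.
    in_use = {"bridge": "172.17.0.0/16", "app_net": "192.168.100.128/25"}
    print(find_overlaps("192.168.100.0/24", in_use))
```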

By following these steps and leveraging modern container networking practices, you can achieve highly efficient, segmented, and secure Macvlan deployments that scale into complex production environments. Integrate with overlay solutions or multi-host orchestrators like Docker Swarm only after you confirm single-host networking is solid and conforms to your organization’s broader network provisioning and security standards.

Macvlan vs Bridge Mode

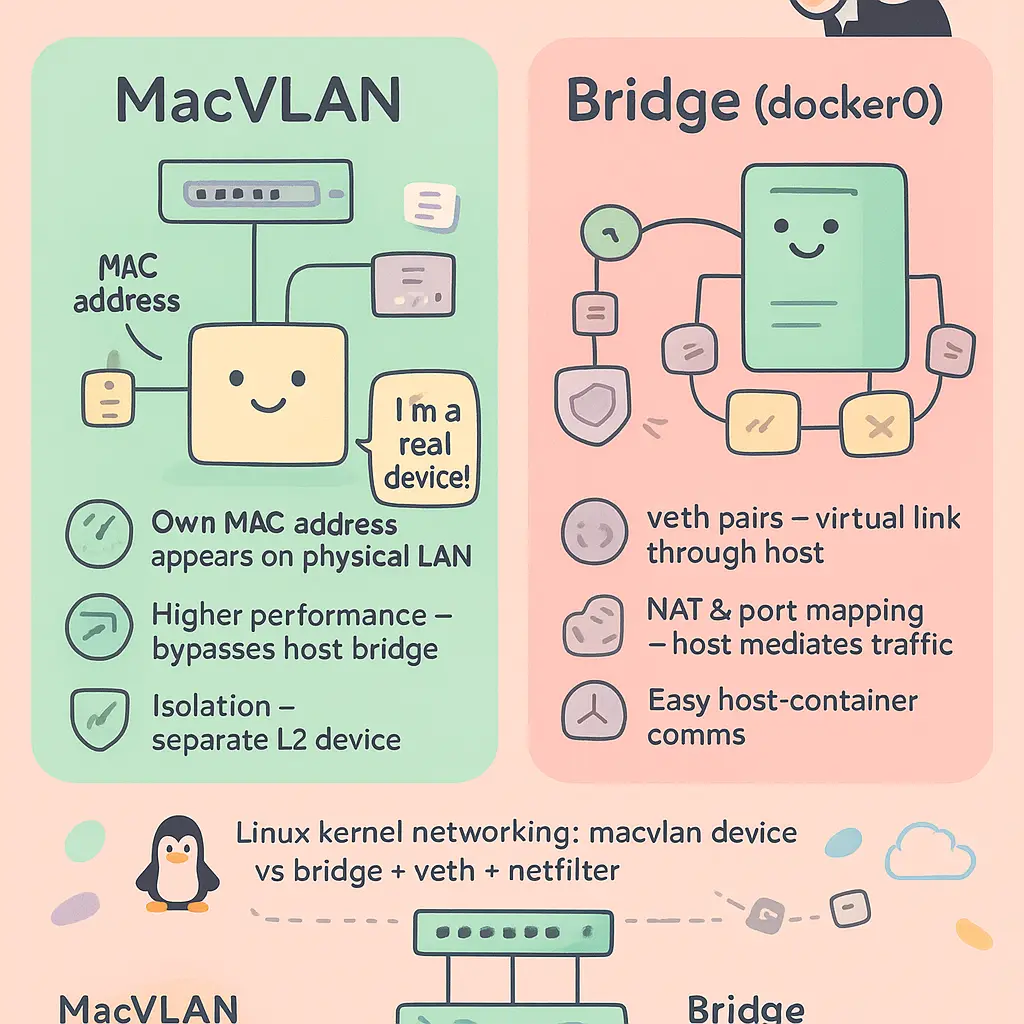

When comparing MacVLAN and Bridge mode in container networks—especially in modern Docker and containerized environments—it's crucial to understand the practical differences in how each mode interacts with the host system, the Linux kernel, and the wider infrastructure like physical network switches and gateways. Both modes aim to enable container network communication, but the way they handle network isolation, subnet configuration, and integration with the host network interface can dramatically impact your deployment’s security, scalability, and troubleshooting complexity.

Bridge mode is the default Docker networking driver, utilizing the bridge network created by Docker on the Docker host. In this configuration, containers attach to a user-defined or default bridge. Each container has its own network namespace, connected to the bridge through a virtual Ethernet (veth) pair, and sits on a private subnet that is not visible to the external network. Outbound traffic is mapped to the host’s interface with network address translation (NAT). Containers on the same bridge network can communicate with each other directly, but they are not addressable from the wider network without published ports or additional routing. This model simplifies network provisioning and supports most general-purpose container deployments, but it can make network monitoring more challenging, because external systems see only the host’s address.

In contrast, MacVLAN mode assigns a unique MAC address to each container and connects it directly to the physical network interface of the Docker host, bypassing the NAT layer. Containers become first-class citizens on the physical network: they appear as independent Layer 2 devices, each with its own IP address within the assigned subnet. This enables direct communication between containers and any other devices on the same VLAN or subnet, without the need for port mapping or translation. With MacVLAN, you can implement VLAN trunking and network segmentation strategies using 802.1Q tagging, often used in enterprise environments where multiple isolated networks share the same physical infrastructure. MacVLAN can also improve network performance because it omits the overhead associated with the bridge and NAT, which matters for high-throughput or latency-sensitive services.

However, MacVLAN does come with limitations. The parent interface must accept frames destined for every container MAC address. On bare metal the kernel normally handles this for you, but on a virtual machine the hypervisor has to permit promiscuous mode on the virtual NIC, and upstream switch ports must allow multiple MAC addresses per port. Depending on your security or infrastructure policies, this may not always be desirable or even allowed. Also, containers on a MacVLAN network cannot communicate with the host through the parent interface by default; reaching the host requires an extra macvlan interface on the host plus a route, and reaching containers on a bridge network requires routing through the external network. This can complicate network troubleshooting and system monitoring, especially in hybrid deployments or when integrating with overlay drivers and orchestrators like Docker Swarm. Additionally, MacVLAN is not usable on all virtualization platforms or cloud environments: for example, VirtualBox needs specific adapter settings, and some public cloud platforms drop traffic from MAC addresses they did not assign.

When deciding between MacVLAN and Bridge mode, consider the nature of your container network configuration. If you need containers to behave like independent hosts on the network—such as for legacy applications, integration with existing DHCP infrastructure, or when strict network segmentation is required—then MacVLAN provides unmatched flexibility but at the cost of greater setup complexity and infrastructure dependencies. On the other hand, if you prioritize ease of management, compatibility with overlay networks, and straightforward container network isolation, Bridge mode remains the go-to solution for most Docker networking scenarios. Enterprises often combine both approaches, using Bridge mode for routine deployments and MacVLAN for specialized workloads requiring direct Layer 2 access or advanced VLAN support.

Best practices for 2026 include leveraging enhanced CNI plugins for greater flexibility, employing network monitoring tools to gain insight into both MacVLAN and bridge-attached containers, and maintaining up-to-date documentation on your network interface configuration and subnet provisioning strategies. In environments where network security is paramount, always review the implications of exposing containers directly to the physical network, regardless of the mode you choose.

Docker Macvlan Configuration

To configure Docker’s Macvlan driver effectively in 2026, it’s essential to understand how this networking mode allows containers to have their own MAC addresses on the network just like physical machines. This setup is especially valuable when you want your containers to appear as separate hosts directly on the network layer, making them individually reachable by other devices on the same subnet. When you set up a Docker Macvlan network, you must pay close attention to several crucial components—physical network interface, VLAN configuration, subnet allocation, and proper integration with your existing network infrastructure, including any 802.1Q-enabled switches or network segmentation requirements.

A practical configuration typically starts with choosing a parent interface: the network interface on the Docker host that physically connects to your network switch. In a Linux environment, you select this parent interface (for example, eth0 or ens192) and, if you need access to multiple VLANs, connect it to a trunk port on the switch. The Macvlan driver can be used in bridge mode or in 802.1Q trunk bridge mode depending on your requirements. Bridge mode is the most straightforward: containers communicate with external systems and with other containers on the same Macvlan network, but not with the Docker host itself, because the kernel does not pass traffic between a parent interface and its macvlan sub-interfaces. If containers must not talk to each other, use private mode; if they need to reach the host, add a macvlan interface on the host or attach them to an additional bridge network.

When configuring the Macvlan network, specify the subnet that is in use on the parent’s segment, commonly a /24, in line with your network provisioning plan. The gateway must be the address of the router on that segment; Docker does not create it, and the Docker host does not route on the containers’ behalf. Assign an IP range so that each container receives a unique address that no other device or DHCP server will use, which supports both network monitoring and security policies. If the host is a virtual machine, allow promiscuous mode on its virtual NIC so that frames addressed to the containers’ MAC addresses are delivered, and check that switch ports accept more than one MAC address. In enterprise-grade deployments, binding Macvlan networks to 802.1Q sub-interfaces improves network segmentation, so each container or group of containers can be placed on a dedicated VLAN for security or regulatory compliance.
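The 802.1Q layout can be sketched as one Macvlan network per VLAN, each bound to a sub-interface named `<parent>.<vlan id>`. Docker’s documentation describes the driver creating that sub-interface when it does not already exist, but treat this as something to confirm on your own hosts. The VLAN IDs, subnets, and network names below are illustrative assumptions only.

```python
import shlex


def vlan_network_cmds(parent, vlans):
    """Build one `docker network create` argv per VLAN.

    `vlans` maps a VLAN ID to a (subnet, gateway) tuple.
    """
    cmds = []
    for vlan_id, (subnet, gateway) in sorted(vlans.items()):
        if not 1 <= vlan_id <= 4094:
            raise ValueError(f"invalid 802.1Q VLAN ID: {vlan_id}")
        cmds.append([
            "docker", "network", "create", "-d", "macvlan",
            "--subnet", subnet, "--gateway", gateway,
            "-o", f"parent={parent}.{vlan_id}",  # VLAN sub-interface as parent
            f"macvlan{vlan_id}",
        ])
    return cmds


if __name__ == "__main__":
    # Hypothetical plan: VLAN 50 for staging, VLAN 60 for production.
    plan = {60: ("192.168.60.0/24", "192.168.60.1"),
            50: ("192.168.50.0/24", "192.168.50.1")}
    for argv in vlan_network_cmds("eth0", plan):
        print(shlex.join(argv))
```

The switch port facing the host has to trunk the same VLAN IDs, otherwise tagged frames from the containers are dropped upstream.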

A common challenge is container-to-host communication. By design, MacVLAN isolates containers from the Docker host: containers cannot reach the host through the parent interface unless you add a macvlan interface on the host or attach the containers to a secondary bridge network. For environments where you need both external connectivity and host-level management, consider combining Macvlan for external access with conventional bridge networks internally, or using overlay networks when operating in clustered environments like Docker Swarm. Troubleshooting these setups often involves examining the host’s network interface configuration, confirming that no IP conflicts exist, and ensuring that the DHCP pool excludes the range Docker assigns from. Some teams also use VirtualBox test environments or staged subnets to rehearse changes before deploying to production.
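A widely used workaround for the host-to-container gap is a small macvlan "shim" interface on the host, with a route that sends the container range through it. The sketch below only generates the `ip` commands (standard iproute2 syntax) so they can be reviewed before being run as root; the interface name `macvlan-shim`, the shim address, and the container range are hypothetical.

```python
import shlex


def host_shim_cmds(parent, shim_name, shim_ip, container_range):
    """Build iproute2 commands that give the host a path to Macvlan containers."""
    return [
        # Create a macvlan interface on the host, bound to the same parent.
        ["ip", "link", "add", shim_name, "link", parent,
         "type", "macvlan", "mode", "bridge"],
        # Give it a single address taken from the LAN subnet.
        ["ip", "addr", "add", f"{shim_ip}/32", "dev", shim_name],
        ["ip", "link", "set", shim_name, "up"],
        # Route the container range through the shim instead of the parent.
        ["ip", "route", "add", container_range, "dev", shim_name],
    ]


if __name__ == "__main__":
    for argv in host_shim_cmds("eth0", "macvlan-shim",
                               "192.168.100.250", "192.168.100.192/28"):
        print(shlex.join(argv))
```

The shim address should be kept out of Docker’s allocations, for example by reserving it with `--aux-address` when the network is created, and these commands do not persist across reboots unless added to the host’s network configuration.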

The move towards container network isolation and segmentation using Docker Macvlan is accelerating, especially as businesses seek to eliminate network address translation (NAT) bottlenecks and adopt microservices at scale. Docker’s Macvlan driver supports 802.1Q VLAN sub-interfaces, so you can design container networks that mirror complex physical topologies while maintaining robust network security and monitoring. Remember, each Macvlan network you create should be planned with future scalability in mind, favoring consistent nomenclature, clear subnet partitioning, and regular network interface audits to avoid accidental broadcast storms or rogue configuration errors. Ultimately, the best Docker Macvlan configuration is one that carefully balances network performance, isolation requirements, and the administrative overhead of maintaining physical-to-virtual mappings in both cloud and on-premises scenarios.

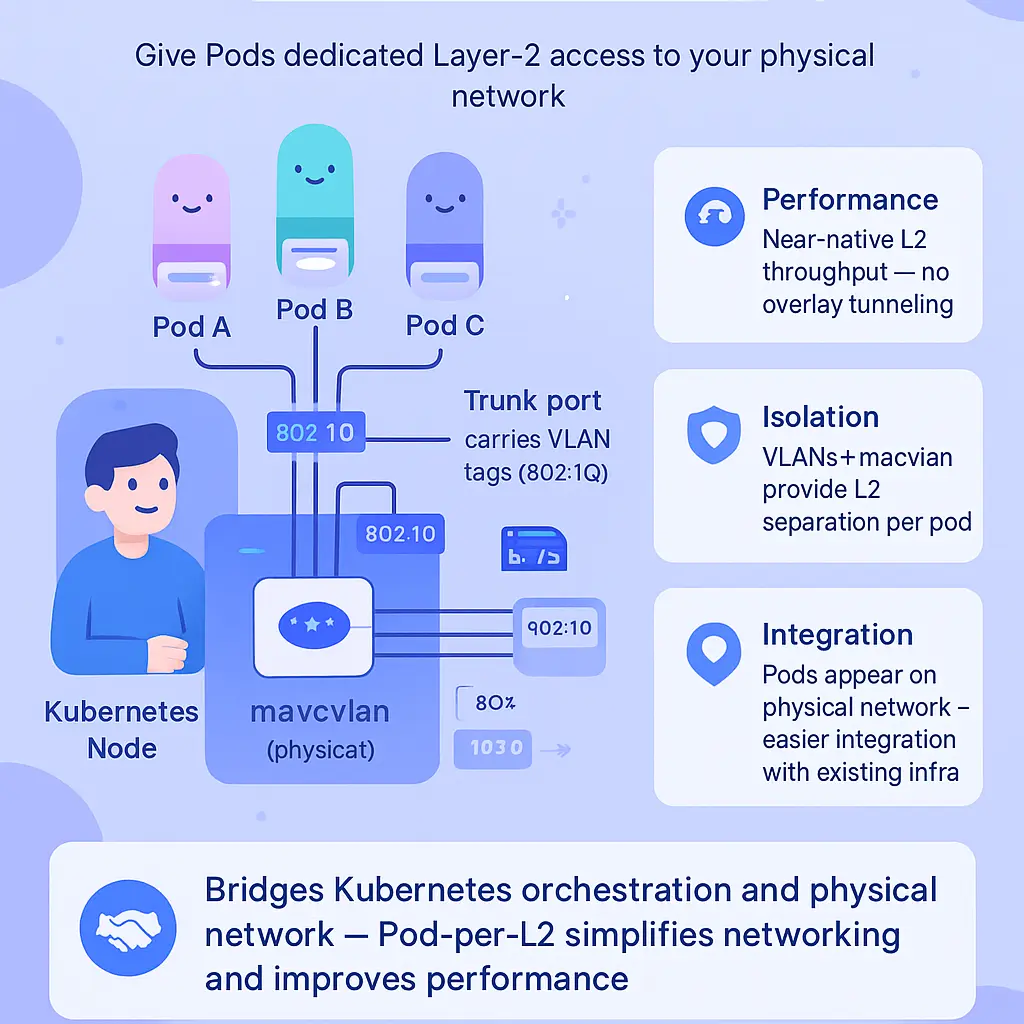

Kubernetes Macvlan Integration

When talking about integrating MacVLAN into Kubernetes environments in 2026, you’re essentially bridging the gap between Kubernetes’ orchestrated container deployments and your physical network infrastructure. The Kubernetes Macvlan integration allows Pods to obtain dedicated Layer 2 network access via unique MAC addresses, bypassing the cluster’s usual Pod network (often an overlay provided by a CNI plugin such as Flannel or Calico). This opens up possibilities for advanced network segmentation, direct container communication with external networks, and integration with enterprise VLANs and network switches.

The underlying mechanics involve presenting multiple MAC addresses on the physical network interface of a Kubernetes node (the parent, or master, interface). Each Pod is seen by the network switch as a distinct network endpoint with its own MAC address. This contrasts with overlay network approaches, where Pod traffic is encapsulated between nodes and is often source-NATed to the node’s IP when it leaves the cluster.

To operationalize Macvlan in Kubernetes, you’ll use Container Network Interface (CNI) plugins. The macvlan CNI plugin creates a macvlan sub-interface on a chosen master interface, which can itself be an 802.1Q VLAN sub-interface, and hands address assignment to an IPAM plugin. In bridge mode, Pods are connected to the same subnet as your physical machines, which gives low latency and direct network connectivity, key for high-throughput workloads or when you’re dealing with legacy systems and network troubleshooting.

Consider a scenario: You have legacy apps running on physical servers in subnet 10.0.1.0/24, and you want your Kubernetes Pods to be first-class citizens on this network for network monitoring or direct database access. By configuring your Macvlan CNI to use the same subnet and specifying the gateway, each Pod gets a full-fledged network presence with its own IP, visible outside the cluster. This approach bypasses the need for overlay network solutions and complex routing rules, reducing latency and maximizing network throughput.
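One way this scenario might be expressed is a macvlan CNI configuration wrapped in a NetworkAttachmentDefinition. That resource type comes from the Multus CNI project, which the text above does not mention, so its use here is an assumption. The sketch generates the manifest as JSON; the gateway 10.0.1.1, the address range, the master interface `eth0`, and the resource name are all hypothetical.

```python
import json


def macvlan_nad(name, master, subnet, gateway, range_start, range_end):
    """Build a NetworkAttachmentDefinition carrying a macvlan CNI config."""
    cni_config = {
        "cniVersion": "0.3.1",
        "name": name,
        "type": "macvlan",
        "master": master,      # node interface the Pods attach to
        "mode": "bridge",
        "ipam": {
            "type": "host-local",
            "subnet": subnet,
            "rangeStart": range_start,
            "rangeEnd": range_end,
            "gateway": gateway,
        },
    }
    return {
        "apiVersion": "k8s.cni.cncf.io/v1",
        "kind": "NetworkAttachmentDefinition",
        "metadata": {"name": name},
        # The CNI config is embedded as a JSON string.
        "spec": {"config": json.dumps(cni_config)},
    }


if __name__ == "__main__":
    manifest = macvlan_nad("legacy-lan", "eth0", "10.0.1.0/24",
                           "10.0.1.1", "10.0.1.200", "10.0.1.220")
    print(json.dumps(manifest, indent=2))
```

With Multus installed, a Pod would typically request this network through the `k8s.v1.cni.cncf.io/networks` annotation. Note that `host-local` tracks allocations per node, so the same range on several nodes can hand out duplicate addresses; a cluster-wide IPAM plugin or per-node ranges would be needed to avoid that.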

Of course, network security and isolation remain paramount. Macvlan provides network segmentation at Layer 2, but you still need to think carefully about access controls, especially in multi-tenant Kubernetes clusters. Employing VLAN trunking with 802.1Q-tagged traffic lets each business unit or environment (like dev, test, prod) attach to its own VLAN, further improving network isolation. This design relies on trunk ports on the upstream switches; if the nodes are virtual machines, the hypervisor must also allow promiscuous mode on the node’s NIC so that frames for the Pods’ MAC addresses are delivered.

One practical recommendation for 2026 Kubernetes deployments is to leverage network provisioning automation via tools like Ansible or Terraform with up-to-date Macvlan CNI configurations. This not only simplifies network interface configuration, subnet provisioning, and VLAN setup, but also allows you to rapidly roll out consistent networking policies across your nodes. When integrating with virtualization platforms like VirtualBox or hybrid stacks combining Docker Swarm and Kubernetes, careful planning is necessary to ensure unique MAC address assignments and to avoid conflicts or network address translation issues.

From a troubleshooting standpoint, the visibility offered by Macvlan is a real advantage. Because workloads are presented as individual hosts from the switch’s perspective, traditional network monitoring tools (such as SNMP or NetFlow) can directly capture Pod traffic, which simplifies network troubleshooting and analysis. Since traffic between Pods and the rest of the network travels over the physical network, standard firewall appliances and security controls remain effective for it. Traffic between Pods on the same node in bridge mode is switched inside the node and does not reach the switch.

Ultimately, the Kubernetes Macvlan integration in 2026 stands as the go-to solution for network engineers demanding granular control over network layer traffic, precise network isolation, and scalable, production-grade networking. Whether you’re dealing with high-security microservices, IoT gateways, or large-scale analytics clusters, this approach brings your container networking closer than ever to bare-metal performance without sacrificing the flexibility of Kubernetes orchestration.

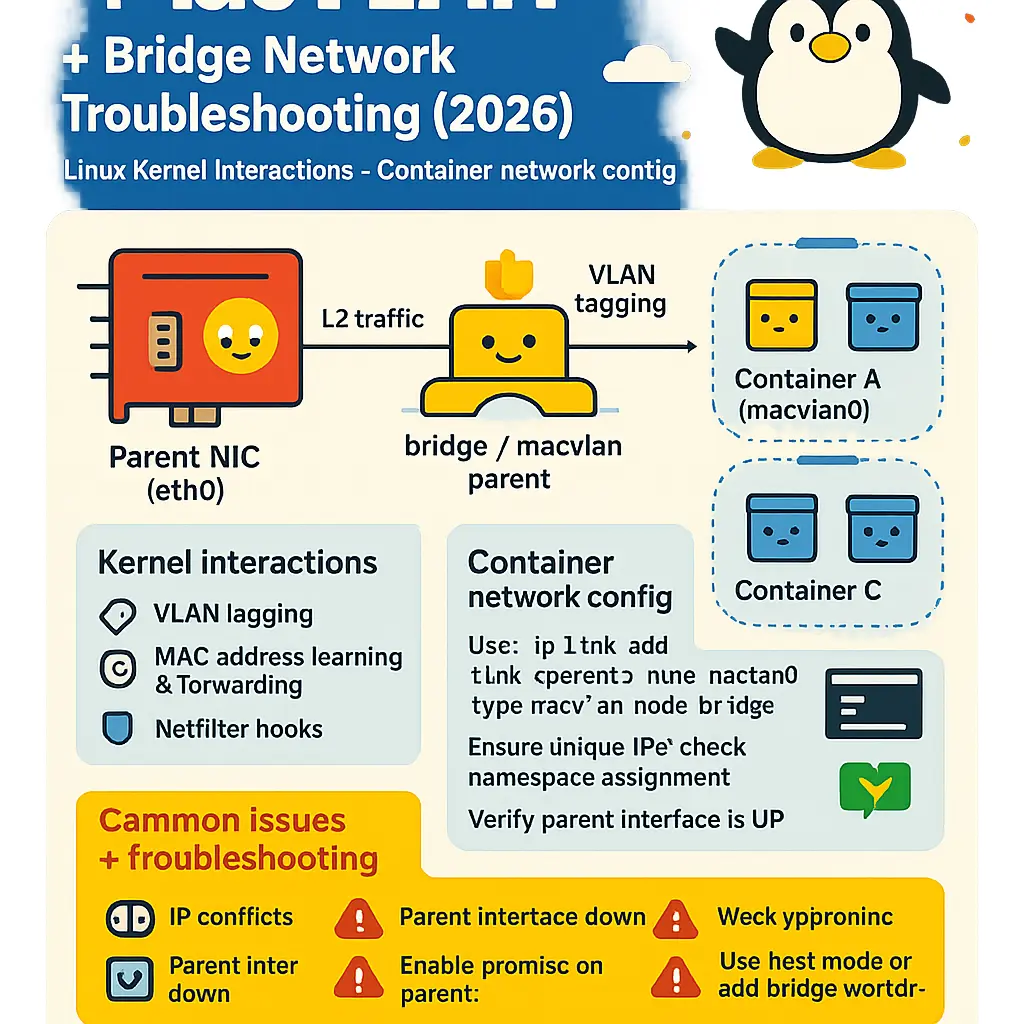

Troubleshooting Macvlan Issues

When it comes to troubleshooting MacVLAN challenges in your Docker or containerized environment as of 2026, understanding both Linux kernel behavior and container network configuration is crucial. Common MacVLAN issues often stem from misunderstandings about how virtual interfaces are attached to the physical network interface, or from misconfigurations related to the parent interface and the upstream network switch. For example, if a container struggles to communicate outside its host, check that the parent interface is set correctly on the Docker host, that promiscuous mode is permitted where the host is a virtual machine, and that the switch port accepts multiple MAC addresses and, for 802.1Q setups, trunks the VLANs you are using.

Always make sure the subnet assigned to your MacVLAN network doesn’t overlap with subnets on other Docker networks or host interfaces; subnet configuration errors can break both network isolation and segmentation, undermining network security best practices. If you’re running multiple MacVLAN configurations on VirtualBox for testing, make sure the virtualized network does not clash with your physical network, as address conflicts here often result in dropped packets or containers becoming unreachable. For environments leveraging CNI plugins or orchestrating with Docker Swarm, persistent connectivity problems may be due to CNI settings overriding your MacVLAN interface, or Swarm networking interfering with direct MacVLAN mappings, so review orchestration-level settings too.

Another frequent pain point is that MacVLAN containers cannot communicate with their own Docker host through the parent interface by default. This limitation is rooted in how the Linux kernel’s macvlan driver handles traffic between a parent and its sub-interfaces, not in NAT, so troubleshooting it needs a different angle. Container-to-container traffic on the same MacVLAN network works in bridge mode, is blocked in private mode, and in VEPA mode depends on the switch reflecting frames back. For host access, add a macvlan interface on the host, or attach a bridge network to the specific containers that need it. When facing seemingly random connectivity or performance issues, use network monitoring tools or packet sniffers on both the parent interface and inside containers. These can help identify dropped frames, incorrect MTU settings, or problems at the VLAN trunking level, which is especially relevant in multi-tenant environments.

In practice, it’s not uncommon for the switch-side port to have security policies that block unknown MAC addresses, a hazard for dynamic container creation via MacVLAN. Always coordinate with your network team to ensure any port security or MAC filtering policies play well with ephemeral container network provisioning. On the Docker side, check your MacVLAN network’s mode (bridge, private, VEPA, or passthru), as each behaves differently in terms of container communication possibilities. If your deployments run inside virtualization platforms like VirtualBox, NAT and host-only adapters can introduce hidden address translation that adds another layer of complexity.
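Checking the mode and parent can be scripted against the JSON that `docker network inspect <name>` prints. The sketch below parses that output and falls back to `bridge` when no `macvlan_mode` option was set, which matches the driver’s default. The sample JSON is hand-written for illustration and trimmed to the fields used; it is not captured from a real host.

```python
import json


def macvlan_summary(inspect_json):
    """Summarise parent, mode and subnets from `docker network inspect` output."""
    network = json.loads(inspect_json)[0]  # inspect returns a JSON array
    if network.get("Driver") != "macvlan":
        raise ValueError(f"{network.get('Name')} is not a macvlan network")
    options = network.get("Options") or {}
    ipam_config = (network.get("IPAM") or {}).get("Config") or []
    return {
        "parent": options.get("parent"),
        # The driver defaults to bridge mode when the option is absent.
        "mode": options.get("macvlan_mode", "bridge"),
        "subnets": [entry.get("Subnet") for entry in ipam_config],
    }


if __name__ == "__main__":
    # Hand-written sample, trimmed to the fields this sketch reads.
    sample = json.dumps([{
        "Name": "lan_macvlan",
        "Driver": "macvlan",
        "IPAM": {"Config": [{"Subnet": "192.168.100.0/24",
                             "Gateway": "192.168.100.1"}]},
        "Options": {"parent": "eth0"},
    }])
    print(macvlan_summary(sample))
```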

Finally, advanced troubleshooting may involve inspecting the Linux kernel’s log for errors from the network drivers involved, for example when a macvlan interface or bridge network fails to initialize. Sometimes upgrading the container runtime or the kernel is necessary, since updates can fix compatibility problems with 802.1Q tagged VLANs and custom network interface configurations. In summary, moving methodically through parent interface setup, subnet assignments, network switch configuration, and container orchestrator settings, and validating each step with active network monitoring, is your best strategy for diagnosing MacVLAN networking hitches in complex, containerized infrastructures.

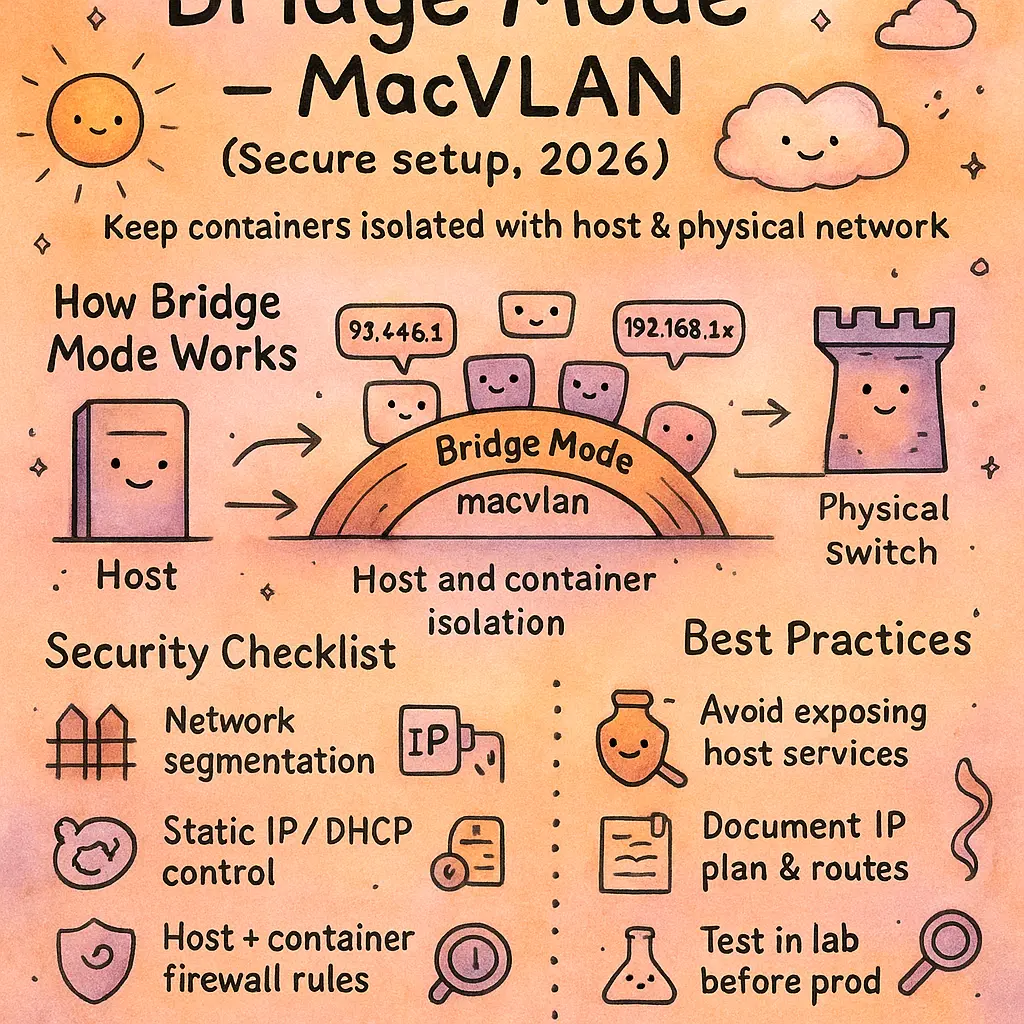

Macvlan Security Best Practices

Securing a MacVLAN network environment in 2026 requires both attention to detail and a practical understanding of how containers integrate with host and physical infrastructure. To start, anyone deploying MacVLAN interfaces on Docker hosts should pay close attention to network segmentation. By isolating subnets and using VLAN trunking with 802.1Q tagging on your network switch and parent interfaces, you keep containerized traffic separated and well-structured, which makes malicious lateral movement much more difficult.

Another cornerstone involves configuring strict network policies at the network namespace and container level. Using the Linux kernel's native capabilities and tools like CNI plugins, cluster operators can enforce rules that limit east-west traffic between containers on the same network or on different MacVLAN subnets. If a Docker Swarm deployment uses overlay networks alongside MacVLAN, enable overlay encryption for the overlay traffic, since MacVLAN itself encrypts nothing. Because MacVLAN operates at Layer 2 and bypasses Docker's default NAT and bridge-mode isolation, it's vital to pair it with monitoring capable of inspecting both inbound and outbound traffic on each MacVLAN interface. For instance, real-time network monitoring lets you detect unexpected broadcasts that might indicate misconfigured or rogue containers.

Fine-grained network provisioning also supports security by assigning each container an explicit IP address within documented ranges, reducing ambiguity in network troubleshooting. Always validate that the assigned subnet is not shared with other services outside the intended container ecosystem. For critical applications, enforce Promiscuous mode controls on parent interfaces, preventing containers or the Docker host from unduly capturing all packets traversing the switch port, which could otherwise make sensitive data visible to unintended listeners.
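The two checks in this paragraph (an address inside its documented range, and a range not shared with other services) can be sketched with Python's standard `ipaddress` module. The function name `check_assignment` and all addresses are hypothetical, chosen only for illustration.

```python
import ipaddress


def check_assignment(ip, documented_range, other_subnets):
    """Return a list of problems found for one planned container address."""
    addr = ipaddress.ip_address(ip)
    allowed = ipaddress.ip_network(documented_range)
    problems = []
    if addr not in allowed:
        problems.append(f"{ip} is outside documented range {allowed}")
    for other in map(ipaddress.ip_network, other_subnets):
        # overlaps() is true when the two networks share any address
        if allowed.overlaps(other):
            problems.append(f"{allowed} overlaps {other}")
    return problems


# A clean assignment, then one that violates both rules.
print(check_assignment("192.168.50.70", "192.168.50.64/27", ["192.168.60.0/24"]))
print(check_assignment("192.168.50.10", "192.168.50.64/27", ["192.168.50.0/25"]))
```

Running a check like this in a provisioning pipeline, before the container is created, keeps the address documentation and the live network from drifting apart.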

It's crucial to layer container network isolation mechanisms on top of MacVLAN by using tight firewall policies both on the Docker host and in your broader IT infrastructure. For example, using the Linux kernel's nftables or iptables tools, you can fine-tune traffic rules to allow only specific protocols and ports between network namespaces, containers, and VirtualBox VMs, if deployed in hybrid environments. Keep in mind that MacVLAN traffic generally does not traverse the host's own filter chains, so rules usually have to live inside the container's network namespace or on upstream firewalls and switches. When designing your container communication paths, consider using private subnets that aren't directly reachable from external VLAN segments, further guarding your workloads.

MacVLAN security should also be enhanced through effective management of the gateway and overlay network compatibility. Gateways connecting container subnets to the outside world should have minimal exposure and robust logging, and any deployment combining Overlay Driver and MacVLAN interfaces must account for possible exposure at bridge network boundaries. Administrators should routinely perform network interface configuration checks to audit which devices and namespaces have MacVLAN auxiliary addresses, to ensure decommissioned containers don’t leave open backdoors.
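A routine audit of this kind could start from the JSON printed by `docker network inspect`. The sketch below works on an illustrative sample rather than live output; the network name, container name, and addresses are made up, and the key names (`Containers`, `IPAM`, `Config`, `AuxiliaryAddresses`) reflect my understanding of that output and should be checked against your Docker version.

```python
import json

# Illustrative sample only, trimmed to the fields this sketch reads.
SAMPLE_INSPECT = """
[{
  "Name": "macvlan20",
  "Driver": "macvlan",
  "IPAM": {"Config": [{"Subnet": "192.168.20.0/24",
                       "Gateway": "192.168.20.1",
                       "AuxiliaryAddresses": {"host-shim": "192.168.20.250"}}]},
  "Containers": {
    "abc123": {"Name": "api", "MacAddress": "02:42:c0:a8:14:02",
               "IPv4Address": "192.168.20.2/24"}
  }
}]
"""


def audit(inspect_json, expected_names):
    """Return attached containers, reserved aux addresses, unexpected names."""
    net = json.loads(inspect_json)[0]
    attached = {c["Name"]: c["IPv4Address"]
                for c in net.get("Containers", {}).values()}
    reserved = {}
    for cfg in net["IPAM"]["Config"]:
        reserved.update(cfg.get("AuxiliaryAddresses") or {})
    unexpected = sorted(set(attached) - set(expected_names))
    return attached, reserved, unexpected


attached, reserved, unexpected = audit(SAMPLE_INSPECT, ["api"])
print(attached, reserved, unexpected)
```

Comparing `attached` against an inventory of containers that should exist is one way to spot endpoints left behind by decommissioned workloads.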

Practical examples of best practices include creating unique parent interfaces on each host for MacVLAN containers and monitoring their associated tags, keeping Docker Hosts updated to the latest supported release, and using sysctl tuning to disable unwanted IPv6 or IP forwarding if not essential for that namespace. Network Layer visibility is further improved by employing tools that tie interface statistics to individual container logs, so anomaly detection is strengthened.

Lastly, routine security reviews should extend to analyzing both bridge and overlay network integrations and validating endpoint registrations for all networked containers. Maintaining accurate asset inventories of each MacVLAN adapter, VLAN assignments, and correlated switch port configurations is key to establishing an end-to-end traceable network transaction history and quickly pinpointing issues in network troubleshooting scenarios. Adopting such layered and contextual security safeguards ensures that your 2026 containerized workloads run safely and in alignment with modern network security mandates.


Performance of Macvlan Networks

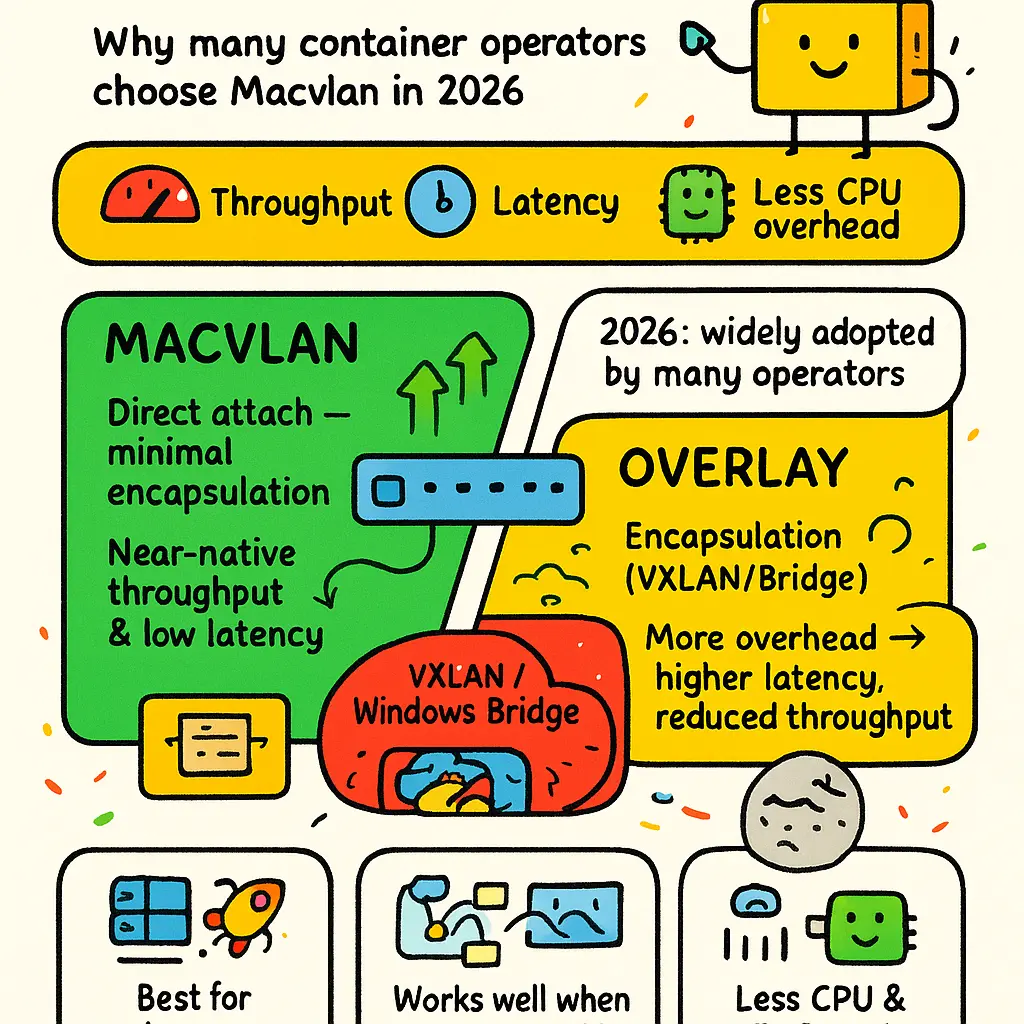

When it comes to the performance of Macvlan networks, many container operators in 2026 choose this driver for its ability to deliver near-native throughput and latency. Unlike the overlay driver, which encapsulates traffic in VXLAN and so adds per-packet overhead, MacVLAN connects containers directly to the physical network interface of the Docker host. Packets leaving the container's network namespace skip the host's software bridge and NAT rules, which increases efficiency and reduces latency. This is particularly advantageous for high-throughput, low-latency workloads like real-time analytics or streaming servers. Traffic therefore passes through the Linux kernel with less work than in overlay solutions, because there is no packet encapsulation and no extra network address translation.

What sets MacVLAN apart is its direct integration with the underlying 802.1Q VLAN tagging standard and Native VLAN trunking on physical switches, letting containers participate in traditional on-premises network segmentation strategies. For instance, each container in a Kubernetes cluster or Docker Swarm can be mapped to a unique MAC address on the wire, communicating with upstream routers and switches as if they were independent physical devices. Thanks to this model, network isolation and traffic control can be enforced at the hardware layer, utilizing standard switch ACLs, without overloading the Docker host’s IP stack. Large environments leveraging advanced network monitoring and enterprise security tools also benefit because traffic inspection and control happen at the switch, much like traditional servers.

However, maximizing Macvlan performance does require careful network interface configuration on the host. Since the driver connects each container as a separate endpoint on the parent interface, the physical or virtual NIC (whether an actual port or a VirtualBox bridged adapter in a test environment) must accept frames for multiple MAC addresses, which in virtualized setups usually means allowing promiscuous mode. Without it, the NIC or the hypervisor's virtual switch may silently drop packets destined for container MAC addresses, resulting in intermittent communication issues and complicated troubleshooting. Additionally, in the default bridge mode containers on the same MacVLAN network can reach one another, but the Docker host cannot talk to its own MacVLAN containers through the parent interface (and in private mode containers are isolated from each other as well). This isolation boosts security but complicates applications that need host-to-container traffic. Solutions typically involve adding a dedicated MacVLAN interface on the host or routing through an external gateway, which reintroduces minimal overhead but nothing like the encapsulation cost of overlay drivers.
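A common way to give the Docker host a path to containers on a macvlan network is a macvlan sub-interface on the host itself, attached to the same parent. The sketch below only builds the `ip` commands for such a setup and does not run them; the interface name `mv-shim`, the addresses, and the helper name `shim_commands` are assumptions for illustration.

```python
def shim_commands(parent, shim_name, shim_ip, container_range):
    """Build the ip(8) commands for a host-side macvlan shim interface."""
    return [
        # Create a macvlan interface in bridge mode on the same parent NIC.
        ["ip", "link", "add", shim_name, "link", parent,
         "type", "macvlan", "mode", "bridge"],
        # Give the shim a single host address from the macvlan subnet.
        ["ip", "addr", "add", f"{shim_ip}/32", "dev", shim_name],
        ["ip", "link", "set", shim_name, "up"],
        # Send traffic for the container range through the shim.
        ["ip", "route", "add", container_range, "dev", shim_name],
    ]


for cmd in shim_commands("eth0", "mv-shim", "192.168.1.250", "192.168.1.192/27"):
    print(" ".join(cmd))
```

The shim address should be excluded from the pool Docker allocates from, otherwise a container could later receive the same IP.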

When designing container network configurations at the network layer, consider the subdivision of your subnet. With Macvlan, every container needs a unique IP/MAC combination for direct connectivity; this requirement can rapidly exhaust available addresses on small subnets. Planning scalable subnet configuration strategies is thus crucial, whether working in private datacenters, on public clouds offering physical or bare-metal interfaces, or building hybrid deployments utilizing VLAN trunking.
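To make the address-exhaustion point concrete, here is a small sketch that estimates how many containers an IPv4 subnet can hold. It assumes a prefix of /30 or shorter, subtracts the network and broadcast addresses, and treats the number of reserved addresses (gateway, host shim, and so on) as a parameter; the function name is hypothetical.

```python
import ipaddress


def container_capacity(subnet, reserved=1):
    """Estimate assignable container addresses in an IPv4 subnet.

    Assumes a prefix of /30 or shorter, where the network and broadcast
    addresses are unusable. `reserved` covers the gateway and any other
    addresses held back from containers.
    """
    net = ipaddress.ip_network(subnet)
    usable = net.num_addresses - 2
    return max(usable - reserved, 0)


for subnet in ("192.168.10.0/24", "192.168.10.0/28"):
    print(subnet, container_capacity(subnet))
```

A /28 that looks roomy on paper leaves only 13 container addresses once the gateway is accounted for, which is why subnet sizing deserves attention before the first deployment.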

In benchmarks reported by platform engineers on popular enterprise distributions, isolated Macvlan networks on Docker are commonly described as delivering throughput very close to, often within 2-3% of, the host's native baseline on identical hardware; treat such figures as indicative and measure on your own systems. That said, although Macvlan outpaces overlay network drivers and NAT-based solutions in raw packet throughput and jitter, its scalability is tied directly to the host NIC's capacity and the size of directly routable IP pools. As you scale out containerized workloads with CNI Macvlan plugins on platforms like Kubernetes or OpenShift, be aware that network switches must be provisioned to handle potentially thousands of dynamic MAC addresses. Some lower-end switches have small MAC address tables, and upstream routers have finite ARP caches, so exhausting either can affect container communication reliability or cause hard-to-diagnose failures.

Power users and cloud architects should evaluate not just single-hop performance, but also management considerations: If network segmentation or zero-trust microsegmentation is mandated, Macvlan can increase visibility for network security analysts yet shift isolation responsibility back to platform-level ACLs and routing policies. Tools for network provisioning and monitoring, like next-gen NMS solutions, must be updated to accurately classify traffic originating from hundreds or thousands of ephemeral containerized endpoints.

In summary, for organizations prioritizing peak Ethernet performance, especially when deploying databases, in-memory caches, or APIs handling bursty traffic, adopting Macvlan with best practices in interface provisioning and switch configuration can yield outstanding returns. The condition is that you remain proactive with network troubleshooting and keep an eye on the operational limitations around network isolation and switch resource constraints.


Macvlan for IoT Devices

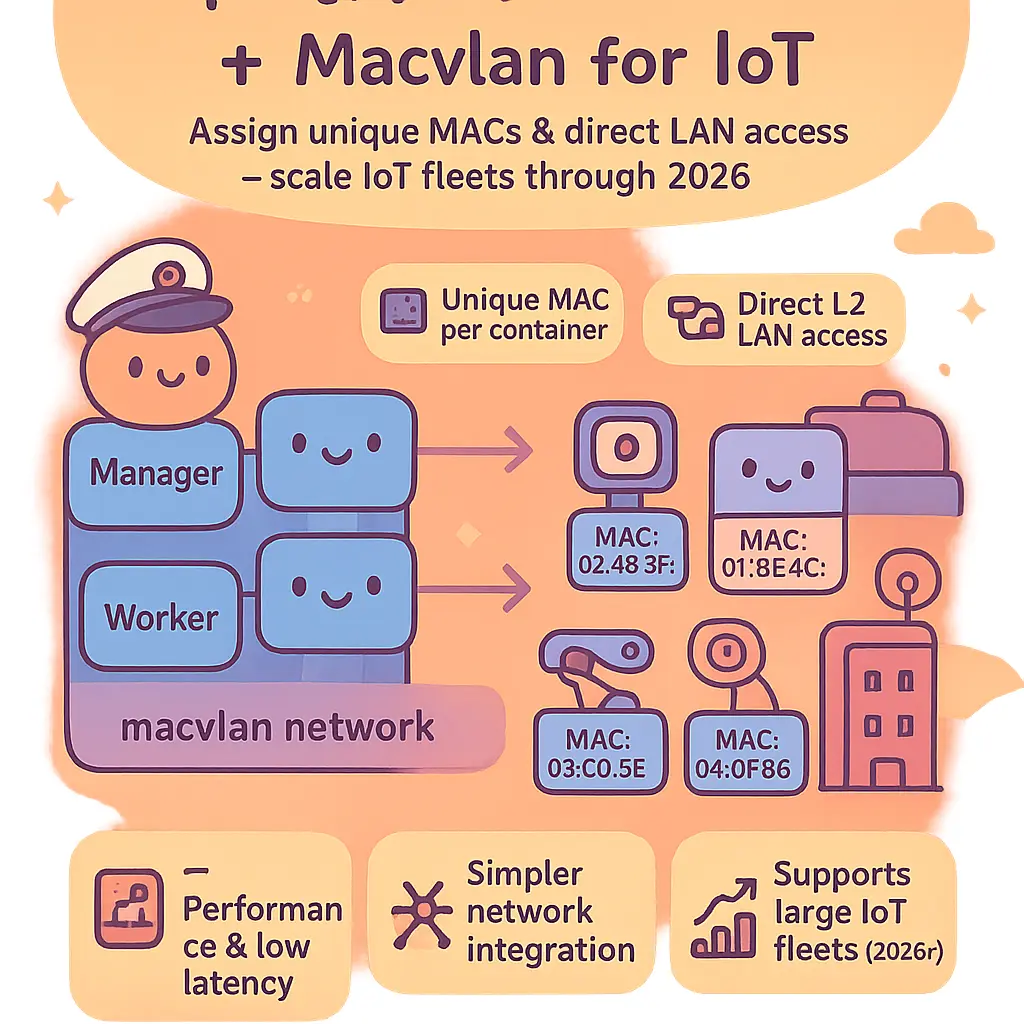

Deploying Macvlan networking for IoT devices within Docker environments has become highly beneficial as companies roll out larger fleets of connected sensors and actuators through 2026. By enabling each containerized IoT application or device simulator to be assigned a unique MAC address and direct presence on the network, MacVLAN effectively lets these containers behave just like physical IoT devices as viewed from the network infrastructure. Administrators can, for instance, tie Docker or Podman containers running on a powerful edge Gateway or VirtualBox-based lab to production-like testing scenarios, where each container acts as an individual device on the subnet. This approach supports robust network segmentation and highly granular network isolation—critical for IoT applications at massive scale, where mixed traffic types, varying security demands, and the need for per-device network monitoring are paramount.

Unlike typical Bridge networks where Network Address Translation (NAT) masks containers behind the Docker Host's IP stack, a MacVLAN network presents containers as first-class citizens on the same Layer 2 broadcast domain as real, physical networked devices. This is a game changer for IoT, as networked sensors or controllers built around 802.1Q VLAN trunking or custom protocols often need direct Ethernet access—without interference from overlay drivers or NAT policies. Utilizing MacVLAN, organizations configure the physical network interface (for example, eth0) of their Linux Kernel-based gateways as the parent interface, then create a MacVLAN bridge mode subinterface. Each assigned subnet IP and automatically allocated unique MAC is presented to the external network switch, facilitating true Layer 2 adjacency. This simplifies tasks such as device discovery, mDNS/Bonjour integration, or automated subnet provisioning across industrial networks and commercial IoT deployments.

As the demand for network segmentation and fault isolation keeps rising, especially in smart building, campus IoT, and OT (Operational Technology) environments, integrating MacVLAN with mainstream container orchestration (like Docker Swarm or advanced CNI plugins) matters more than ever in 2026. Using MacVLAN bridge mode on 802.1Q sub-interfaces, containers attached to different parent interfaces (for failover, bandwidth, or geography) can even participate in multiple VLANs, much like physical hardware using 802.1Q tagging. Configuring proper network interface and subnet mapping ensures that, should an IoT container behave unexpectedly or require detailed troubleshooting, network administrators can pinpoint compromised devices, reroute traffic at the network switch level, and start analysis quickly using common network monitoring tools.

Practical deployments often leverage robust container network isolation strategies, critical for both device and gateway security. For example, imagine fleets of IoT cameras running as lightweight containers. Assigning each channel a static subnet and unified VLAN means you can restrict east-west traffic, only permitting the bare minimum for device control APIs, which helps guard against lateral movement if a single container gets breached. Similarly, promiscuous mode deployment of the network interface allows detailed packet inspection across isolated segments—vital for identifying anomalies, shadow devices, or compliance breaches in real time. Integrators combining overlay and MacVLAN network solutions can position certain IoT containers on overlay networks for cloud relays or hybrid edge computing, while keeping VLAN-segmented latency-sensitive devices local, minimizing risk and resource contention.

One smart tip: always ensure parent interface allocation, address pools, interface names, and VLAN subinterfaces are well documented and consistent, especially across Docker hosts, VirtualBox lab deployments, and production gateways. As management and scale requirements grow, these disciplined practices support seamless network provisioning and troubleshooting. With support maturing through Linux network namespace mechanisms and new developments in CNI plugins for 2026, it's possible to spin up hundreds or thousands of IoT device containers on a single physical edge server while maintaining true Layer 2 segmentation, without NAT compromises or bridge network bottlenecks. This direct adjacency means technical teams benefit from simplified firewall rules, smoother firmware updates, easier device registration, and a much clearer path to regulatory compliance around IoT network segmentation and visibility.

By understanding not only how MacVLAN integrates with Docker networking, but also with switches, VLAN trunks, subnet configuration, and overlay drivers, engineers now have unprecedented flexibility in designing secure, scalable, and maintainable networks for their growing IoT fleets. This ensures that every smart light, industrial sensor, gateway, or application communicates seamlessly while retaining the highest possible standards of network security and segmentation in today’s highly regulated, highly connected environments.


Macvlan VLAN Support Explained

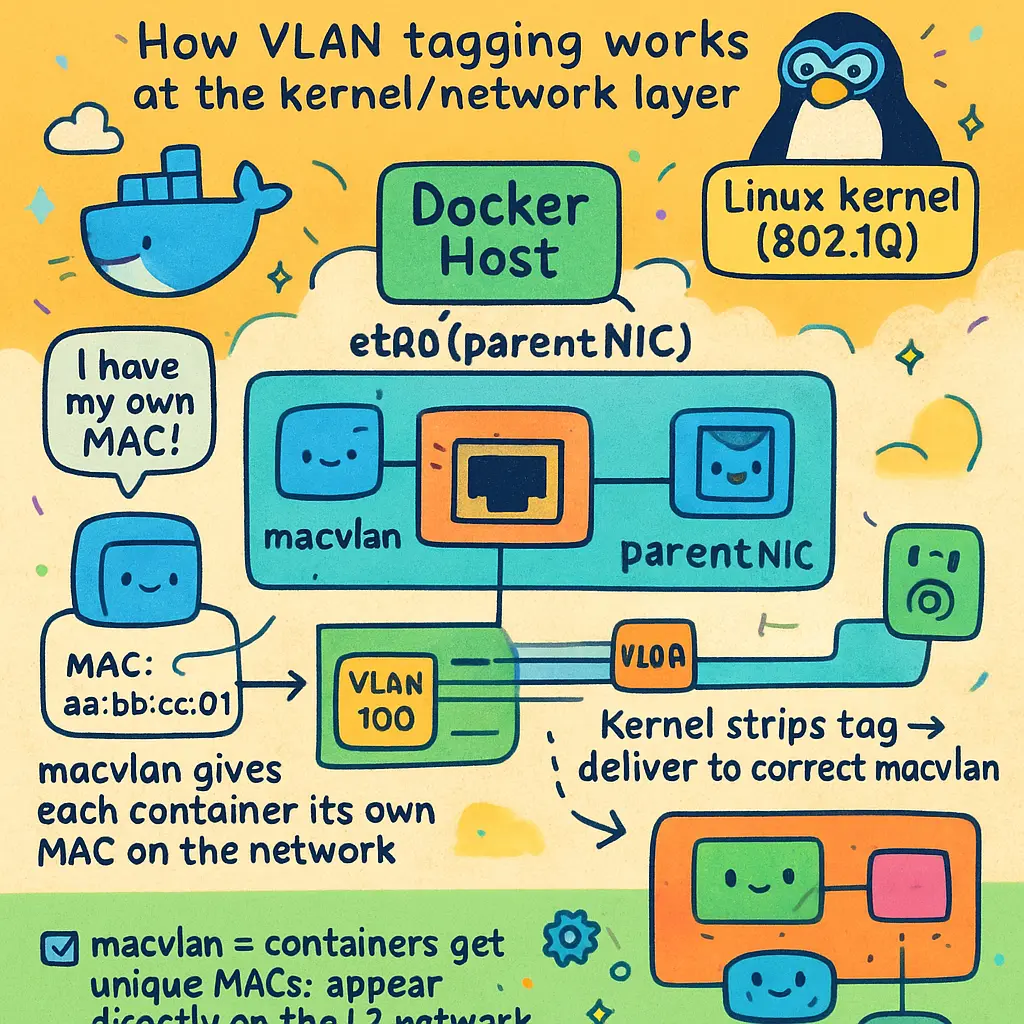

A common question among platform engineers and advanced developers exploring Docker networking in 2026 is: How does macvlan handle VLAN support, and what actually happens at the network layer when VLAN tagging is in play? The answer revolves around the Linux kernel's built-in support of 802.1Q VLAN tagging and the flexibility macvlan networks bring to containerized stacks.

Unlike overlay drivers that tunnel communication between container endpoints, MacVLAN networks give containers their own separate Layer 2 MAC addresses, which allows traffic to flow onto the physical network as if containers were standalone devices. When you use macvlan on your container host, whether on bare-metal systems, VirtualBox instances, or as part of a Docker Swarm cluster, the parent interface is your direct line to the real network switch or VLAN trunk. By pointing the macvlan network at a VLAN sub-interface of the parent (for example eth0.20 for VLAN 20), you instruct Docker, or a CNI plugin in other orchestrators, to confine that network's containers to a specific Layer 2 segment.

Let's break it down by example. Say your company runs multiple application microservices that must be totally separated at the network level: finance systems and development test beds should never communicate. Instead of relying on basic bridge network isolation, macvlan VLANs give you deep segmentation. You pick a parent interface (often eth0, ens160, or another physical NIC) that accepts frames for multiple MAC addresses, then specify a VLAN tag (for example, 802.1Q tag 20 for production and tag 30 for development). The parent interface becomes the trunk, carrying the parallel traffic streams to a switch port configured for the same VLANs. Because each macvlan network is bound to its own tagged sub-interface, you get network isolation that comes close to what classic VM setups achieve.
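The production/development split described here can be sketched as two `docker network create` invocations, one per VLAN, each pointing at a dotted sub-interface of the parent (for example `eth0.20`). The helper below only assembles the command line; the network names, subnets, and gateways are hypothetical, and the sketch assumes the switch port already trunks VLANs 20 and 30.

```python
import shlex


def macvlan_create_cmd(name, parent, vlan_id, subnet, gateway):
    """Assemble a docker network create command for one VLAN-backed macvlan."""
    return [
        "docker", "network", "create", "-d", "macvlan",
        "--subnet", subnet, "--gateway", gateway,
        # A dotted parent name such as eth0.20 selects the 802.1Q sub-interface.
        "-o", f"parent={parent}.{vlan_id}",
        name,
    ]


prod = macvlan_create_cmd("prod20", "eth0", 20, "10.20.0.0/24", "10.20.0.1")
dev = macvlan_create_cmd("dev30", "eth0", 30, "10.30.0.0/24", "10.30.0.1")
print(shlex.join(prod))
print(shlex.join(dev))
```

Keeping one network per VLAN means a container's segment is decided by the network it joins, not by anything configured inside the container.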

When troubleshooting container-to-container communication or setting up network traffic monitoring, VLAN support with macvlan is straightforward. Each container instance gets its own interface reminiscent of a physical NIC, with the corresponding VLAN tag applied at the kernel level. There’s no need for network address translation (NAT), and packets tagged by the container reach the Gateway or Docker Host’s interface with zero synthetic overlays, making performance predictable and deterministic. It's all visible to standard network troubleshooting and monitoring workflows (like tcpdump, Wireshark, and advanced APM tools).
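When inspecting captured traffic, it can help to see what the 802.1Q tag looks like on the wire. The sketch below parses the tag from the start of an Ethernet frame: after the two 6-byte MAC addresses comes the TPID `0x8100`, followed by a 16-bit field holding the priority (3 bits), drop-eligible indicator (1 bit), and VLAN ID (12 bits). The sample frames are hand-built for illustration.

```python
import struct


def parse_vlan_tag(frame: bytes):
    """Return the 802.1Q tag fields of an Ethernet frame, or None if untagged."""
    # Bytes 0-5: destination MAC, 6-11: source MAC, 12-13: TPID or EtherType.
    (tpid,) = struct.unpack_from("!H", frame, 12)
    if tpid != 0x8100:
        return None
    (tci,) = struct.unpack_from("!H", frame, 14)
    return {"pcp": tci >> 13, "dei": (tci >> 12) & 1, "vid": tci & 0x0FFF}


# Broadcast frame from 02:42:0a:14:00:02, tagged with VLAN 20, carrying IPv4.
tagged = bytes.fromhex("ffffffffffff" "02420a140002" "8100" "0014" "0800")
untagged = bytes.fromhex("ffffffffffff" "02420a140002" "0800" "4500")
print(parse_vlan_tag(tagged))
print(parse_vlan_tag(untagged))
```

In a capture taken on the parent trunk interface the tag is normally present, while a capture inside the container typically shows untagged frames, because the sub-interface adds and strips the tag.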

Best practices in 2026 for VLAN-enabled macvlan include matching your container network configuration with the physical infrastructure: confirm the Docker host is plugged into a switch port that supports trunking, verify the parent interface configuration (with ip link, or ifconfig on older systems), and ensure your Docker networks match what the network security framework specifies for VLAN segmentation. Combining macvlan with third-party CNI plugins is common, and the extra controls let you provision ephemeral or persistent container networks scoped precisely by subnet and VLAN.

As enterprise platforms shift loads between bare-metal and VM provisioning—especially with Docker Swarm and self-hosted clusters replacing parts of Kubernetes—consistency of macvlan VLAN handling matters. The Linux kernel evolves, but the fundamentals of network interfaces and VLANs don’t change: containers can ride directly atop 802.1Q trunks, with practical and secure boundaries defined at Layer 2. Smart utilization means your network engineers gain reliable network isolation and segmentation, while DevOps teams roll out lightweight, production-like testbeds at will.


Macvlan Limitations and Solutions

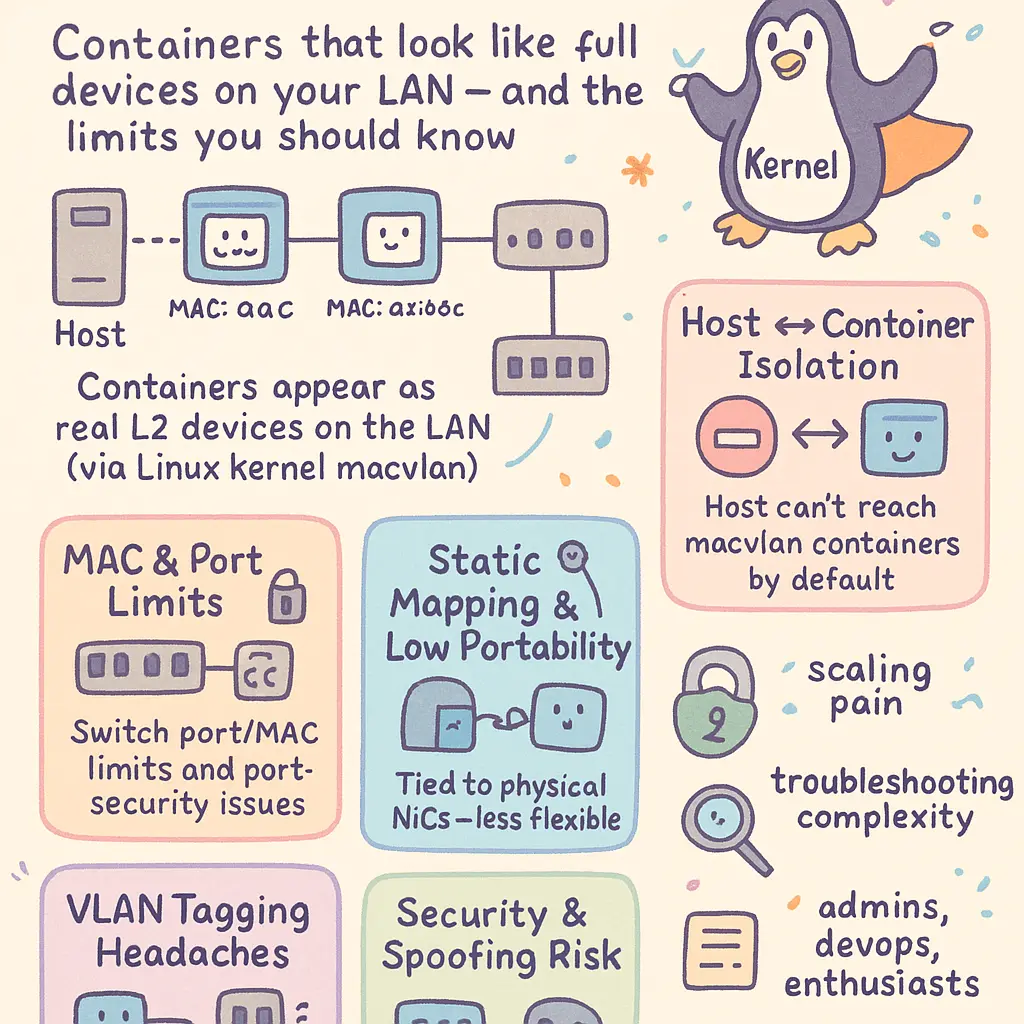

MacVLAN is a powerful Docker networking solution, widely adopted for scenarios where containers must appear as full-blown devices on a Layer 2 LAN, but this approach comes with several key limitations that corporate admins, DevOps engineers, and even advanced enthusiasts encounter. One immediate challenge is host isolation: every container attached through MacVLAN gets its own unique MAC address and operates almost as a parallel host on the physical LAN, yet the Docker host itself cannot communicate directly with containers that use its physical interface as their parent unless you add a secondary MacVLAN interface on the host or route through an external device. Containers on the same MacVLAN network can reach each other in bridge mode, but not in private mode. This complicates container communication and subnet configuration, particularly in multi-tenant environments or implementations running on a single Docker host.

Moreover, running large microservices architectures built with Docker Swarm or Kubernetes CNI plugins triggers scaling constraints, because the network has to handle large numbers of virtual MAC addresses, often pushing the limits of available IPv4 addresses within a predefined subnet or colliding with addresses handed out by the DHCP server on your router. Unlike the overlay driver, which tunnels container traffic and provides segmentation under the hood, MacVLAN relies on direct exposure to the Layer 2 fabric, which makes rapid scale-out across multiple hosts and segments harder.

Another inherent pitfall comes in hybrid and virtual test beds, especially in VirtualBox or similar virtualized environments, where MacVLAN may not work at all or may be unstable, either because of its dependency on the physical network interface or because the virtualization layer does not pass frames for additional MAC addresses transparently. Typically, MacVLAN needs the parent interface to accept promiscuous mode, something many hypervisors and certain managed switches restrict to limit broadcast traffic or satisfy network security standards.

Working within complex VLAN configurations (including 802.1Q VLAN tag scenarios with VLAN trunking), users often struggle to maintain concise network isolation between production and dev containers. Misconfiguration here could unintentionally bridge production services with dev containers if subnetting is not granularly enforced, muddying compliance around network security or PCI DSS rules.

Addressing these limitations requires a blend of architectural shifts and creative problem-solving:

- Multi-Parent Setup: By distributing containers over multiple physical or logical parent NICs, network namespace isolation is maintained for intra-service communication, minimizing forced network address translation and excessive bridge networks. It allows containers to communicate locally without awkward static routes.

- Bridge Networks for Local Host Communication: When host-to-container communication is essential, use a bridge network and MacVLAN in tandem, attaching the container to both so that local traffic stays inside the Linux kernel. Though this adds minor complexity to the container network configuration, it sidesteps MacVLAN's host communication restriction.

- Network Monitoring and Troubleshooting: Keep continuous checks on network provisioning. Advanced observability, for example SNMP sub-agents or log shipping from CNI plugins, ensures that sudden broadcast flooding or Docker host miswiring gets caught early, especially on heavily automated networks.

- Hybridizing Networking Plugins: Consider using MacVLAN strictly for single-tenant, high-throughput workloads or environments where Layer 2 isolation is paramount, and overlay networks or bridge mode for environments demanding high container counts or seamless failover. The overlay driver and standard bridge mode retain convenient features like service discovery in Docker Swarm.

- Linux Kernel and Network Switch Management: Configure your managed network switch and upgrade host NIC drivers whenever possible. Up-to-date firmware, combined with kernel hardening that limits broadcast traffic, heads off quirks in complex static routing involving hybrid VLANs or multiple subnets.

- Fine-tuned Subnet Segregation: Proactively carve out narrowly defined subnets for your MacVLAN allocation rather than pulling from legacy address pools. Proper VLAN ID assignment, complete with inter-VLAN ACLs, produces solid segmentation rules for cleaner container traffic.
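The subnet-segregation idea above can be sketched with the standard `ipaddress` module: split one documented block into equally sized ranges and assign each to a workload group. The block, the prefix length, and the group names are hypothetical; the resulting ranges could be fed to an option such as Docker's `--ip-range` when each network is created.

```python
import ipaddress


def carve_ranges(subnet, new_prefix, names):
    """Assign consecutive sub-ranges of `subnet` to the given group names."""
    blocks = ipaddress.ip_network(subnet).subnets(new_prefix=new_prefix)
    # zip() stops at the shorter input, so unused blocks stay unallocated.
    return {name: str(block) for name, block in zip(names, blocks)}


plan = carve_ranges("10.30.0.0/24", 27, ["prod", "dev", "lab"])
for group, block in plan.items():
    print(f"{group}: {block}")
```

Because the ranges never overlap, ACLs on the switch or gateway can be written per group without enumerating individual container addresses.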

Realistically, achieving airtight Network Segmentation and robust network security in MacVLAN-based Docker environments in 2026 relies not only on understanding where limitations lurk, but on tailoring container network isolation strategies to the scale, compliance requirement, and network interface configuration peculiar to your shop. Each solution offers a trade-off: Overlay and Bridge networks trade a little raw speed for flexible, robust communication, while pure MacVLAN shines in high-performance, tightly-secured apps directly tethered to a segmented physical LAN or IoT sensor mesh. Always run layered Network Monitoring in tandem with defensive address assignment, emphasizing proactive Subnet Configuration and adjusting for potential edge-cases raised by Network Namespace handling and constant advancements in the Linux networking stack.


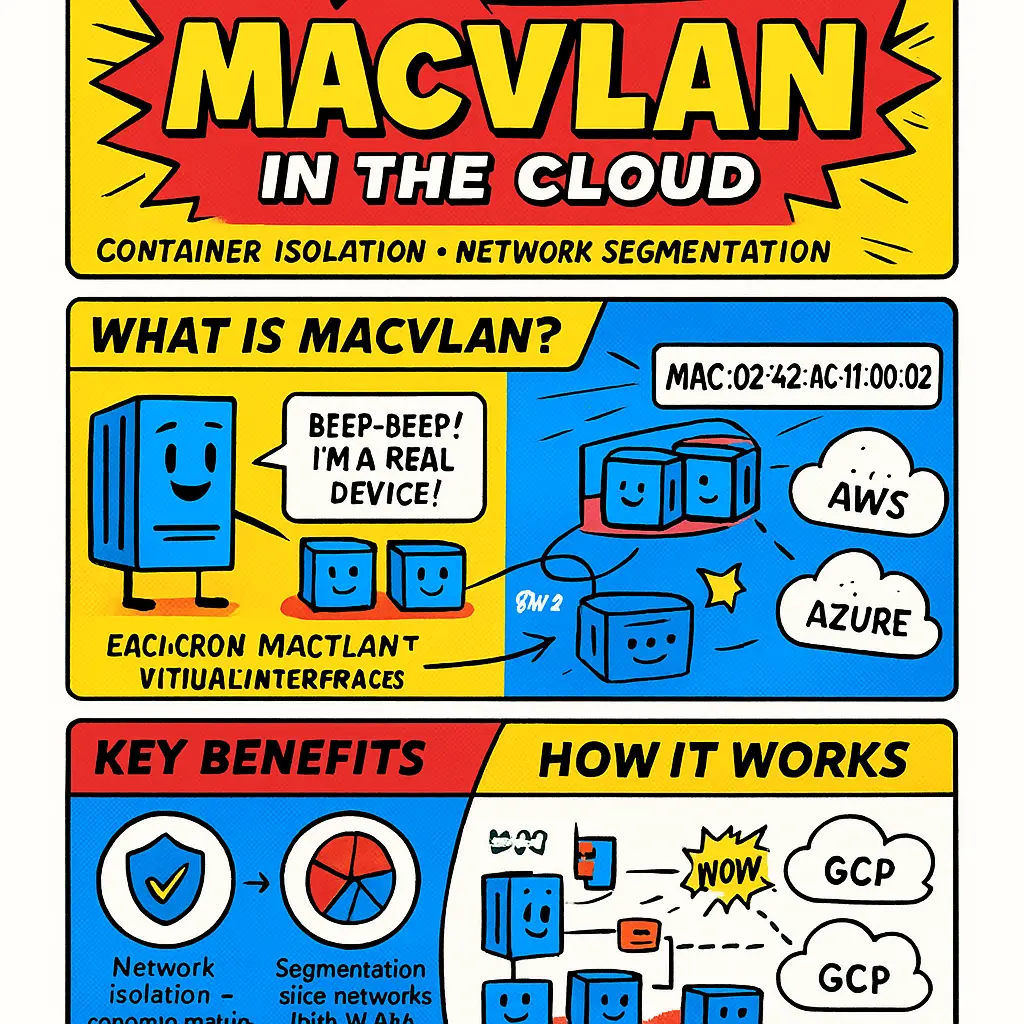

Macvlan in Cloud Environments

Managing MacVLAN in cloud environments opens up opportunities for container network isolation, network segmentation, and streamlined communication across distributed Docker hosts, but it requires care. Platforms such as AWS, Azure, and Google Cloud are heavily invested in their own native container networking, and their virtual networks typically deliver traffic only to MAC addresses the provider has assigned to an instance. As a result, MacVLAN is most practical on bare-metal instances, private clouds, or wherever the provider explicitly supports additional MAC addresses, so incorporating it requires both a solid understanding of the fundamentals and an awareness of the provider's current integrations as of 2026. Where it is available, MacVLAN lets you assign several virtual MAC addresses to a physical network interface (the parent interface), so containers in Docker Swarm or Kubernetes clusters own their identity at Layer 2, free of the bridge network complexity that sometimes leads to cross-container interference or noisy neighbor issues.

One practical scenario involves enterprises leveraging MacVLAN driver directly alongside CNI plugins to bridge Docker communication between high-security workloads and legacy app VMs. Tiered architectures within public cloud often rely on nested network namespaces for advanced Network address translation and the detection of lateral threats, making MacVLAN extremely valuable since each container gets isolated within both the Network Namespace and at the MAC address layer. For businesses focused on network security, integrating MacVLAN helps segregate Container traffic into very granular Subnets, working harmoniously with solutions like VLAN trunking and 802.1Q tagged switches frequently used in hybrid and multi-cloud architectures.

Connecting remote clusters is a different problem: MacVLAN provides Layer 2 adjacency on a single segment, so links between geographically separate Docker hosts still depend on routing or a stretched VLAN between sites. Within each site, steps might include reserving an address for each container in the pool tied to the physical network interface, setting the MacVLAN network to bridge mode, and keeping any SNAT rules on the upstream gateway narrow enough to allow only the monitored transfers. Administrators also benefit from promiscuous mode for network monitoring, since they can focus logging and packet tracing on the relevant VirtualBox instances or on traffic that flows outside the paths defined by the overlay driver, a common challenge in cloud-native security operations centers.

Where overlay networks can impose cognitive friction, such as intricate overlay driver configuration or convoluted bridge network routing, MacVLAN presents a more transparent mechanism for Layer 2 connectivity. Because each MacVLAN network maps to an isolated subnet and gives Docker networking teams greater clarity around device-to-device traffic flows, network provisioning for segment-based microservices deployments gets easier. For example, instead of stretching a complex routed overlay through Docker Swarm managers positioned on ephemeral cloud VMs, a team may attach MacVLAN to hosts on the same segment for direct Layer 2 container communication. Furthermore, containers using MacVLAN may see slightly lower CPU usage because packets skip the encapsulation and translation steps performed by higher-level network abstractions.

Of course, there are nuanced troubleshooting caveats in modern cloud setups. Teams employing VLAN trunking must carefully map container subnet configurations to the actual VLAN tags on the provider's switches; accidental mismatches are not uncommon on shared-tenancy VLANs managed by a third party, particularly where legacy enterprise applications have been wrapped in Docker containers or VirtualBox environments. Likewise, as containers become increasingly ephemeral, MacVLAN isolation cannot span fault domain boundaries unless it is coordinated with cloud-native CNI drivers. Conduct multi-tier testing when bridging legacy Windows servers (sitting within VirtualBox hosts), MacVLAN-attached Linux microservices, and public cloud-exposed Docker Swarm clusters in one session: the logs and per-container throughput observed over a run lasting several hours provide the trend data that business operations need in 2026 to support customer growth.

In summary, when building flexible, scalable network topologies in cloud environments, integrating MacVLAN networks offers several key advantages: direct container communication, improved container network isolation, stringent network security, and throughput segmented by granular, device-specific subnets. By championing network interface configuration best practices for cloud-native applications, DevOps teams can capture real-time statistics for hundreds of containers moving dynamically between host geo-zones or microsegments enforced by 802.1Q tagging. From persistent troubleshooting instruments like custom SNMP polling to streaming collection of container heartbeat logs, MacVLAN networks supply a tangible bridge linking the physical and virtual landscape of the modern enterprise cloud.


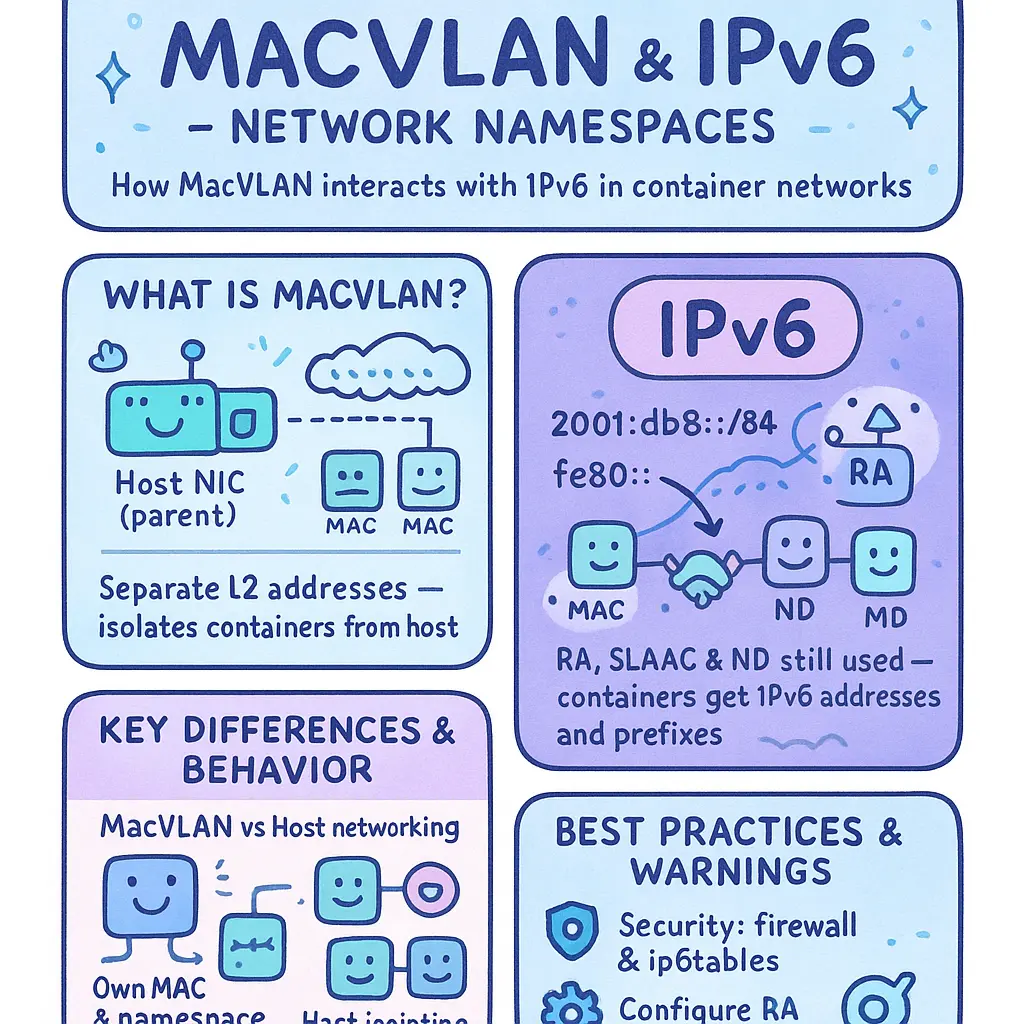

Macvlan and IPv6 Compatibility

When deploying containers with MacVLAN network mode on Docker, in Docker Swarm, or within CNI-based orchestration frameworks like Kubernetes, understanding MacVLAN's interaction with IPv6 is vital for configuring reliable and secure communication in modern network architectures. The fundamental differences between IPv4 and IPv6, particularly link-local addressing, address scopes, and the much larger subnets, can introduce technical challenges or limitations in how containers use their network interfaces.

MacVLAN essentially allows each container to appear on the physical (or virtual) network switch with its own MAC address, responding as an isolated host directly at Layer 2, as opposed to a bridge network, which masks containers behind the host's interface. This model can be highly effective for network segmentation, intensive network monitoring, or specific security requirements, especially in environments where network troubleshooting and traffic analysis on a per-container basis are priorities. However, integrating IPv6 support requires additional steps, mostly basics such as making sure IPv6 is enabled on the parent interface (whether physical Ethernet or a virtual NIC in a VirtualBox-hosted VM) and carefully configuring subnet allocation.

On Linux kernel 5.x and later, which is widely deployed in 2026, the IPv6 stack handles per-namespace neighbor discovery reliably, which is fundamental to keeping MacVLAN and IPv6 links stable. Deploying dual-stack settings (IPv4 and IPv6 co-existence) requires assigning both an IPv4 and an IPv6 subnet when the network is created and making sure IPv6 is enabled on the Docker host's parent interface. Most major Linux and Kubernetes distributions can manage the parent interface with systemd-networkd or NetworkManager. Nonetheless, fixed IPv6 addressing inside an explicitly defined subnet gives more precise container network isolation and avoids erratic autoconfiguration behavior, probably the most common cause of failed communication when segmentation or VLAN trunking is in use.
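Because each MacVLAN container has its own MAC address, its autoconfigured IPv6 addresses can differ from what an operator expects. As an illustration, the sketch below derives the classic modified EUI-64 link-local address from a MAC: flip the universal/local bit of the first octet and insert `ff:fe` in the middle. This is a sketch of that one scheme only; many current systems generate stable-privacy or random interface identifiers instead, so the result is not guaranteed to match a live container.

```python
import ipaddress


def eui64_link_local(mac: str) -> str:
    """Derive the modified EUI-64 link-local IPv6 address for a MAC."""
    octets = bytearray(int(part, 16) for part in mac.split(":"))
    if len(octets) != 6:
        raise ValueError("expected a 48-bit MAC address")
    octets[0] ^= 0x02  # flip the universal/local bit
    iid = bytes(octets[:3]) + b"\xff\xfe" + bytes(octets[3:])
    prefix = int(ipaddress.ip_address("fe80::"))
    return str(ipaddress.ip_address(prefix | int.from_bytes(iid, "big")))


print(eui64_link_local("02:42:ac:11:00:02"))
print(eui64_link_local("00:11:22:33:44:55"))
```

Pinning static addresses, as the paragraph recommends, removes the dependence on whichever identifier scheme a given image or kernel happens to use.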

A frequent deployment technique is enabling Docker's IPv6 support for persistent MacVLAN usage. Enabling IPv6 for the network (for example with the --ipv6 flag of docker network create; the daemon-level ipv6 setting applies to the default bridge) and specifying an IPv6 subnet (a ULA prefix such as fd00::/64, or a global unicast prefix) brings containerized services into the IPv6 world. It also means containers attach directly to the parent interface, so your physical network infrastructure (gateway, switches with 802.1Q VLANs) and any virtualization layer beneath the host (VirtualBox, for example) must still recognize and pass their traffic. It's recommended to avoid proxy NDP unless a specific design requires it, since containers on MacVLAN do not use network address translation for outbound traffic; they are native network endpoints with a unique Layer 2 identity and answer neighbor discovery themselves.
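A dual-stack MacVLAN network of the kind discussed here might be created with one IPv4 and one IPv6 subnet in the same `docker network create` call. The sketch below validates the inputs with `ipaddress` and assembles the command without running it; the network name, parent sub-interface, and addresses are hypothetical.

```python
import ipaddress
import shlex


def dual_stack_cmd(name, parent, v4_subnet, v4_gw, v6_subnet, v6_gw):
    """Assemble a dual-stack macvlan docker network create command."""
    v4 = ipaddress.ip_network(v4_subnet)
    v6 = ipaddress.ip_network(v6_subnet)
    if v4.version != 4 or v6.version != 6:
        raise ValueError("need one IPv4 and one IPv6 subnet, in that order")
    if ipaddress.ip_address(v4_gw) not in v4:
        raise ValueError(f"{v4_gw} is not inside {v4}")
    if ipaddress.ip_address(v6_gw) not in v6:
        raise ValueError(f"{v6_gw} is not inside {v6}")
    return [
        "docker", "network", "create", "-d", "macvlan", "--ipv6",
        "--subnet", v4_subnet, "--gateway", v4_gw,
        "--subnet", v6_subnet, "--gateway", v6_gw,
        "-o", f"parent={parent}", name,
    ]


cmd = dual_stack_cmd("dual20", "eth0.20", "192.168.20.0/24", "192.168.20.1",
                     "fd00:20::/64", "fd00:20::1")
print(shlex.join(cmd))
```

Validating gateways against their subnets before calling Docker catches the most common copy-and-paste mistakes in dual-stack definitions.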

Where most engineers run into trouble is with neighbor advertisements failing to pass correctly between subnets when a Docker host has multiple interfaces, each bonded to a distinct VLAN trunk, and with gaps that open when MAC pinning is applied inconsistently across virtual switches. A workable remedy is to manage firewall policy centrally and make sure promiscuous mode is not toggled by VMs or containers that change network configuration at the same time; this tightens security and keeps conflicting Neighbor Discovery (ND) traffic out of subnets that should be segmented.

For a real-world example, suppose you’re setting up MacVLAN containers in a financial stack with Portfolio and Auditor containers, each on its own 802.1Q VLAN with a dedicated IPv6 subnet: one VLAN carries outbound threat-analysis traffic and the other serves the reporting backend, on a Docker host connected to both a cable modem and a mobile LTE uplink. A sound routine here is to confirm that all network switches handle SLAAC router advertisements correctly at the trunk port level, that each VLAN's configuration is consistent with the parent interface's link-local addressing, and that the IPv6 subnet settings are recorded so audit logs from every interface can be correlated. In addition, running ping6 checks from the containers during failover re-provisioning exposes missing global routes, which is usually where routing breaks first when bridge networks and overlay replacements are mixed in during rapid expansion.
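The ping6 checks mentioned above could be automated along these lines. This is a sketch under assumptions: the container names and target addresses are hypothetical, the container image is assumed to ship a ping binary that accepts -6, and the command runner is passed in as a parameter so the logic can be exercised without a Docker host.

```python
import subprocess


def ping6_command(container, target, timeout_s=2):
    """Build a one-packet IPv6 ping executed inside a container."""
    return [
        "docker", "exec", container,
        "ping", "-6", "-c", "1", "-W", str(timeout_s), target,
    ]


def check_targets(container, targets, run=subprocess.run):
    """Map each target to True if the ping exited with status 0."""
    results = {}
    for target in targets:
        completed = run(ping6_command(container, target), capture_output=True)
        results[target] = completed.returncode == 0
    return results


def unreachable(results):
    """List the targets that did not answer, in the order they were checked."""
    return [target for target, ok in results.items() if not ok]
```

During a failover test, calling check_targets("auditor", ["fd00:50::1", "2001:db8::1"]) with those example addresses replaced by the VLAN gateway and an address beyond it would show whether only the local segment works or global routing does too.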

Key takeaways for enhancing IPv6 compatibility with MacVLAN as of 2026 boil down to the following practical strategies for admins and developers:

- Always verify in advance that your gateway and the Docker host’s physical network interface support IPv6 multicast traffic and SLAAC

- Set a unique static, dual-stack address configuration for each container whose traffic needs isolation from network snooping

- Regularly monitor segment traffic with Linux kernel eBPF tooling and neighbor table logs, which is especially relevant when automating network provisioning

- Tighten VLAN trunking policies so that virtual network interfaces and overlay networks chain together cleanly

- Document all parent interface configuration, in particular any change that puts a network interface into promiscuous mode, and make such changes carefully when bridge networks share the host

- Keep the limitations of the Docker networking layer in mind, especially Docker daemon interruptions that can disrupt ingress traffic as deployments scale
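The static dual-stack recommendation in the list above can be sketched as a simple address plan. The container names, subnets, network name, and image below are hypothetical. The sketch gives each container the same host offset in both subnets and builds a docker run command using the --ip and --ip6 options.

```python
import ipaddress


def assign_static_addresses(containers, v4_subnet, v6_subnet, first_host=10):
    """Give each container one IPv4 and one IPv6 address at the same offset."""
    v4 = ipaddress.ip_network(v4_subnet)
    v6 = ipaddress.ip_network(v6_subnet)
    plan = {}
    for offset, name in enumerate(containers, start=first_host):
        # Stay clear of the IPv4 broadcast address at the end of the subnet.
        if offset >= v4.num_addresses - 1:
            raise ValueError(f"no free IPv4 address left for {name}")
        plan[name] = (str(v4[offset]), str(v6[offset]))
    return plan


def run_command(name, network, addresses, image):
    """Build a docker run argv that pins both addresses for one container."""
    v4_address, v6_address = addresses
    return [
        "docker", "run", "-d", "--name", name, "--network", network,
        "--ip", v4_address, "--ip6", v6_address, image,
    ]


# Hypothetical plan for two containers on an assumed dual-stack network.
plan = assign_static_addresses(
    ["portfolio", "auditor"], "192.168.50.0/24", "fd00:50::/64"
)
for name, addresses in plan.items():
    print(" ".join(run_command(name, "dualstack_net", addresses, "alpine:latest")))
```

Using the same offset in both address families makes it easier to match a container's IPv4 and IPv6 entries when reading logs or neighbor tables.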

Networking successfully with MacVLAN and IPv6 improves both reliability and scalability across the entire stack, from a lone developer's VM sessions (using VirtualBox bridged mode) to multi-host cloud deployments orchestrated with Docker Swarm. Despite a higher configuration effort than legacy bridge networks or overlay drivers, a correctly mapped system gains strong network segmentation, room to scale across hybrid environments, and peace of mind for IT, supported by layered network monitoring techniques suited to 2026 and beyond.

Professional illustration about Network

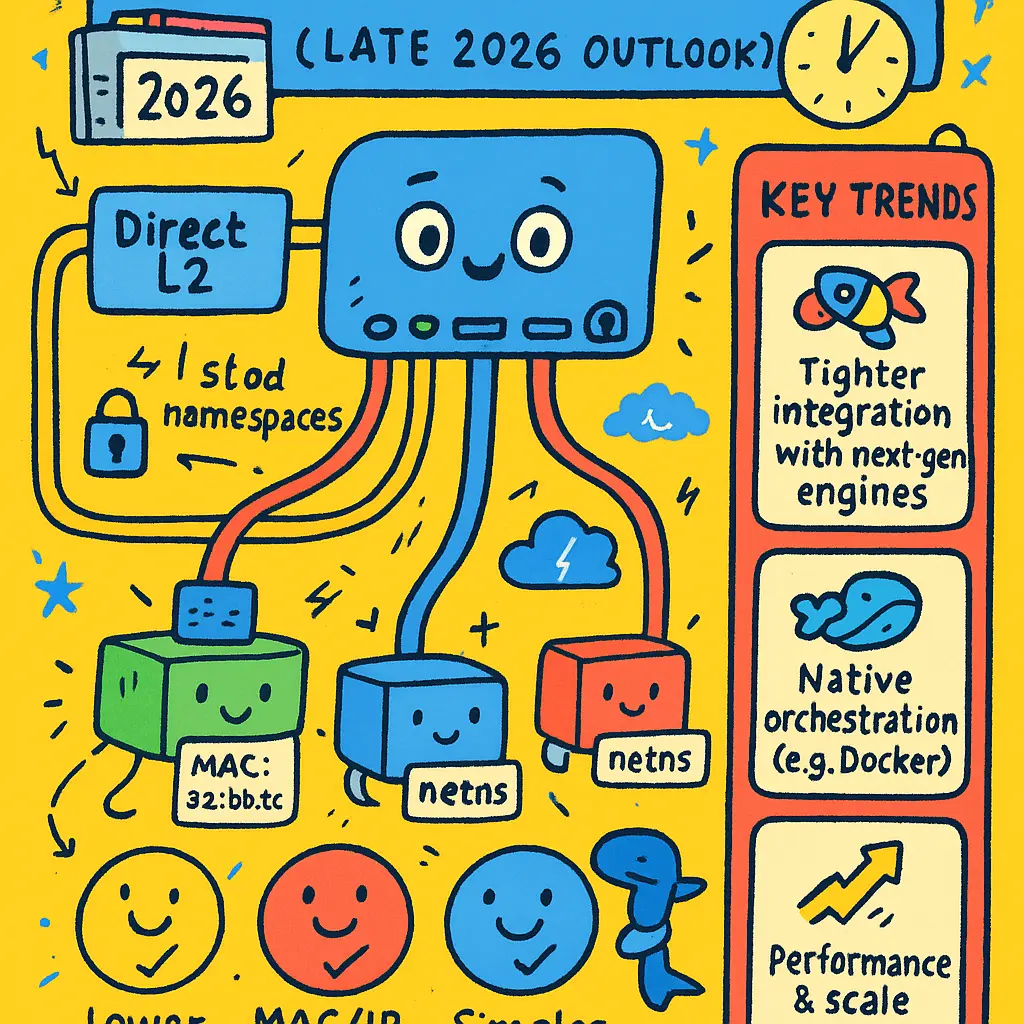

Future Trends in Macvlan

Looking ahead to late 2026, MacVLAN-based networking continues to evolve, with several changes expected for container and virtualization platforms. Major trends center on tighter integration with next-generation container engines and orchestration platforms such as Docker Swarm, and on enhanced CNI plugins. As more data centers adopt hardware-assisted virtualization, a seamless connection between the Linux kernel and multi-tenant network infrastructure becomes pivotal. Interoperability work focuses on lowering barriers between the Docker host’s physical network interfaces and container networks, especially in bridge mode setups and for containers running on overlay networks in multi-tenant environments. Network security remains front and center, driving wider adoption of 802.1Q VLAN trunking, stronger network segmentation, and isolation methods that defend against increasingly sophisticated lateral movement while still allowing smooth container communication.

Increasing automation of network provisioning, for example with ephemeral virtual machines in VirtualBox combined with Linux network namespaces, points to emerging trends in service mesh and edge deployments. For organizations scaling east-west traffic, flexible subnet selection has to cover not only tight subnet configuration and Layer 2 or multi-hop designs but also more demanding network troubleshooting needs, such as real-time link mapping and NETCONF-based tooling. Smarter handling of the overlay driver and the parent interface enables faster enrollment and better route simulation, creating practical isolation for heterogeneous hosts, whether Kubernetes CRI-based workloads or legacy standalone Docker deployments that require direct assignment to trusted subnets through locked-down bridge networks.

At the DevOps level, container network configuration tools with graphical interfaces are improving quickly, and plugins in 2026 can automatically flag optimization opportunities or orphaned endpoints, which streamlines network monitoring workflows. Supporting complex routing across multiple Docker hosts is getting easier: recent changes to playbooks and agent patterns allow live network interface configuration changes and better rollback of recorded state during volatile host events. Among cloud vendors running hybrid on-prem and multi-region clusters, Linux kernel alignment work and persistent network switch handshakes improve both performance and resilience, a top demand as production-scale workloads (especially big data, AI/ML jobs, and I/O-intensive database containers) push the limits of maximum throughput.

Another driver is the growth of maximum-isolation scenarios in internal SaaS microservice architectures that deploy containers by location or workload zone, with differentiated checkpoints and dynamic address allocation at the network layer, backed by clean Network Credential Authority log chains. Adding secondary monitoring through promiscuous mode, instead of relying only on network address translation, allows quick assessment of rogue access on mixed or hybrid platforms. Container networking is also moving toward uniformity across mixed environments: in 2026, hybrid scheduling, disruption affinity (preventing workload drift), and end-to-end compliance enforced through tighter MacVLAN policy are becoming cornerstones of practical deployments. These changes benefit from broader NetFlow collection and predictive analysis on the primary interface, which keeps operational health and traffic traceability high.

Finally, efforts to guarantee hardware compatibility further support multi-host VirtualBox and VMware vSphere integration in short-lived test and release clusters, using specialized child MAC address propagation, which speeds up environment turnover during blue/green and feature-flag releases in production. Altogether, as we move into late 2026, making active use of these capabilities (orchestration-aware placement, unified bridge and VLAN trunks, advanced address pooling, and guarded operations that keep container network isolation consistent) positions MacVLAN as a resilient pillar for next-generation interconnected systems spanning cloud-native, edge, and on-prem hybrid environments.